Posts posted by yee245


I guess I was mainly getting at the fact that some benchmarks don't/can't actually use the extra GPU cores, even if they're there. It's not quite the same situation (since those are already categorized as single-threaded anyway), but it would be a bit like Superpi or Pifast giving points for each core, thread, or processor count even though they're single-threaded. You don't get to sub in more categories and get extra points for Superpi just because you're using a dual- or quad-Xeon setup. As I understand it, some of those benchmarks, like Port Royal, Night Raid, etc., will only utilize 2 GPUs, even if you have more, just by the nature of the benchmark. So while Superpi/Pifast have only a single ranking, some of those 3D benchmarks are really "single-threaded" or "2-threaded" GPU benchmarks, and any additional GPU cores just sit idle. That said, I don't know whether something like Different SLI may or may not help make more GPU cores work together.

I just don't know how difficult it would be to selectively limit certain benchmarks to only give points for certain GPU counts. Or maybe it should just be left to people to decide whether to spend the time/effort benchmarking things that may be inefficient but still give you a score and boints (like benchmarking some of those high-core-count multi-Xeon/Epyc setups in Time Spy or something without disabling cores/threads, and getting really inefficient scores).

-

Regarding globals for 3x/4x card setups, I doubt it would be trivial to implement, but if some of the options could just be disabled, or points disabled only for the benchmarks that don't actually support that many GPUs, it might solve some of the issues of getting "free" global points. For example, I think it's Port Royal (and Night Raid) that just won't use more than 2 GPUs, even if you have a functional 3/4-way setup. Similarly, I think the Wild Life benchmarks state in their documentation that they don't support SLI, so any submissions with 2/3/4-way SLI are still effectively single-card benchmarks. WL isn't so much an issue regarding points, since it currently gives none, but I am currently benefitting from the fact that 3/4-way SLI will technically run Port Royal with older GPUs and give a score, even though it doesn't scale past 2 cards, and PR does give globals for those 3/4-way configs.

Other 3D benchmarks that currently give globals do in fact support 4-way configs, like FSE, TSE, Vantage, etc., and they're filterable options in 3DMark's Hall of Fame pages.

Then you run into other potential "issues" with removing points/globals from multi-GPU setups. For example, GPUPI v3.3 32B gives globals, and there are subs for 1/2/3/4/5/6/7/8x GPUs. That benchmark simply benefits from having multiple GPUs without requiring functional SLI or anything like it, unlike the actual graphics benches. So do you award globals only for 1- or 2-GPU setups and not more, or do you remove globals from that benchmark entirely? The same question applies to the other 3D benchmarks above that do support 3/4-GPU configs.
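One way to picture the per-benchmark limit suggested above is a lookup of how many GPUs each benchmark can actually use, with globals awarded only up to that count. This is a hypothetical sketch: the table, the function name `awards_globals`, and the caps are assumptions based only on what's described in these posts (Port Royal/Night Raid using at most 2 GPUs, Wild Life not supporting SLI, FSE/TSE/Vantage supporting 4-way), not HWBOT's actual points rules.

```python
# Hypothetical sketch only: names and caps are illustrative assumptions,
# not HWBOT's real points logic.
MAX_USEFUL_GPUS = {
    "Port Royal": 2,           # reportedly won't use more than 2 GPUs
    "Night Raid": 2,           # reportedly won't use more than 2 GPUs
    "Wild Life": 1,            # documentation says no SLI support
    "Fire Strike Extreme": 4,  # supports 4-way configs
    "Time Spy Extreme": 4,     # supports 4-way configs
    "Vantage": 4,              # supports 4-way configs
}


def awards_globals(benchmark: str, gpu_count: int) -> bool:
    """Return True if a sub with gpu_count GPUs would earn globals in this sketch."""
    if gpu_count < 1:
        return False
    cap = MAX_USEFUL_GPUS.get(benchmark)
    if cap is None:
        # Benchmarks with no known cap (e.g. GPUPI, where the open question is
        # whether to cap at all) keep whatever behaviour they have today.
        return True
    return gpu_count <= cap
```

Leaving unlisted benchmarks untouched keeps the open GPUPI question separate from the clear-cut cases like Port Royal.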

-

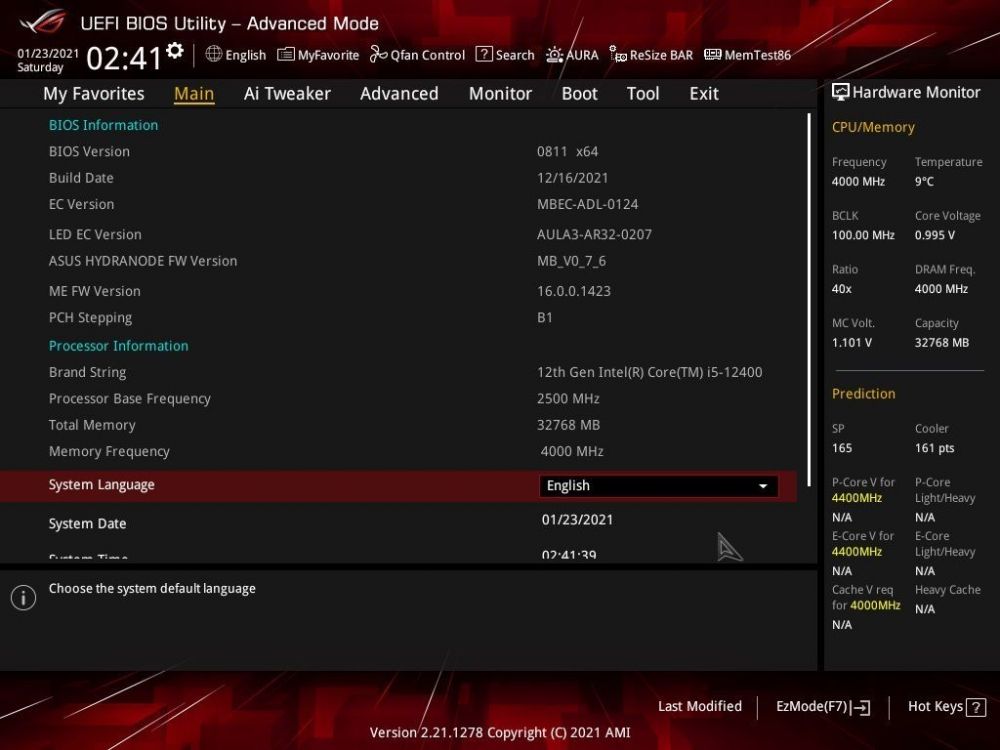

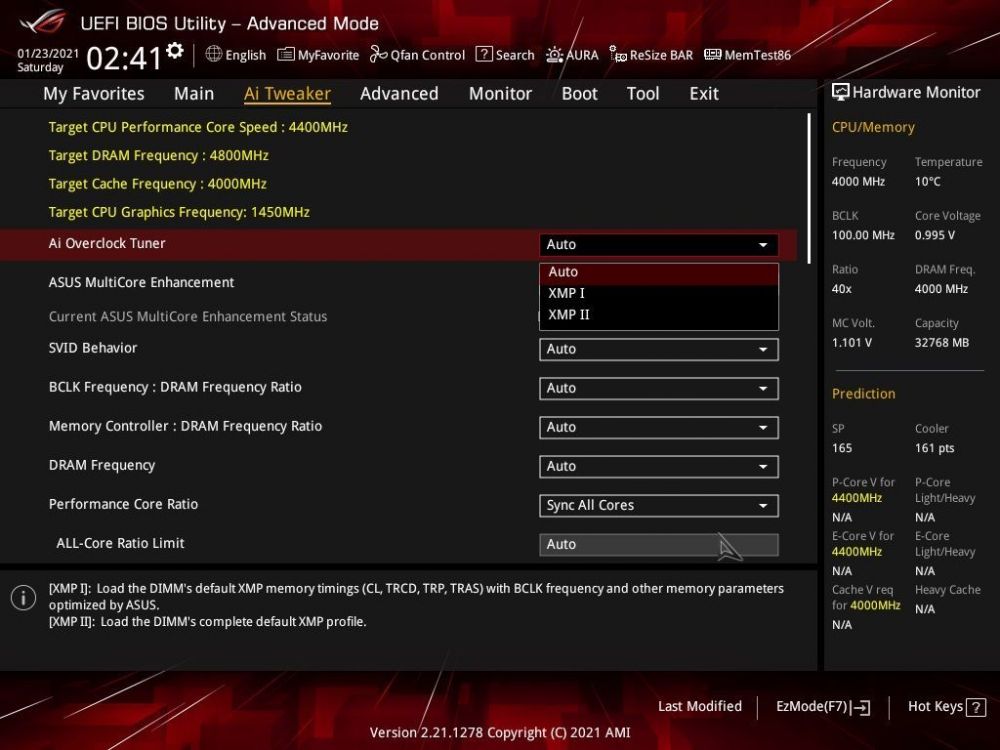

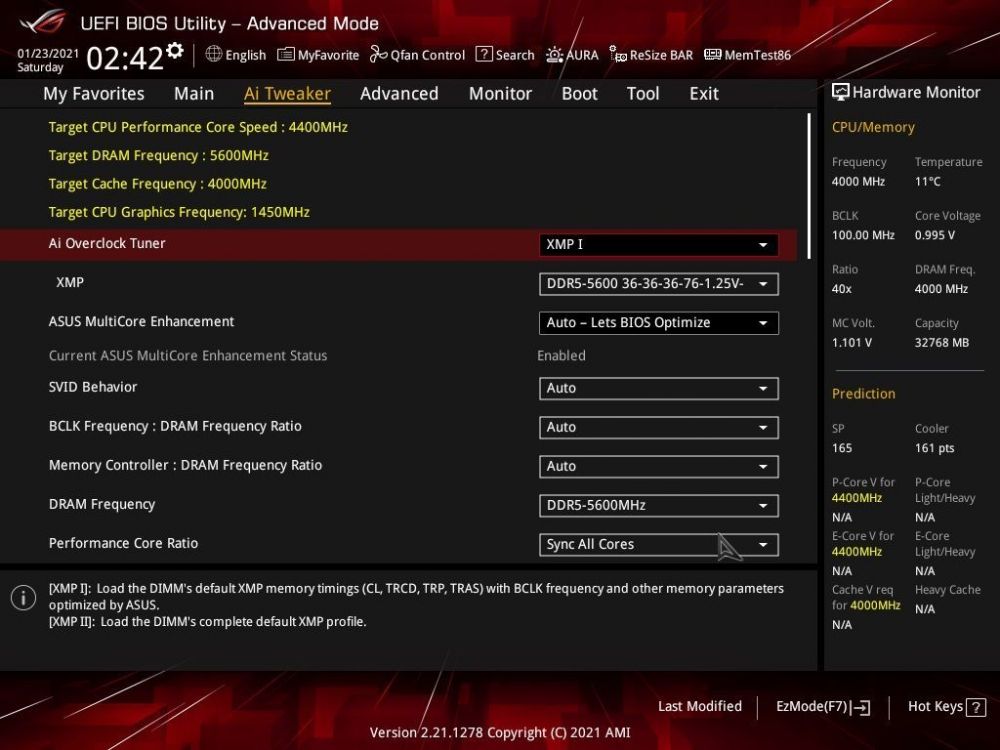

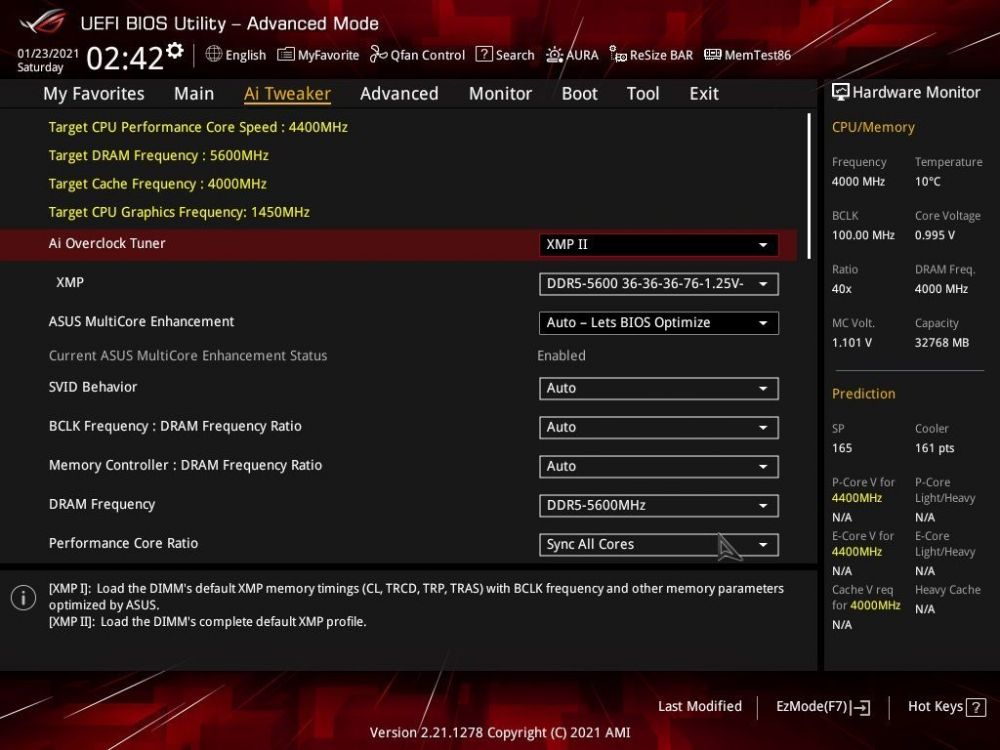

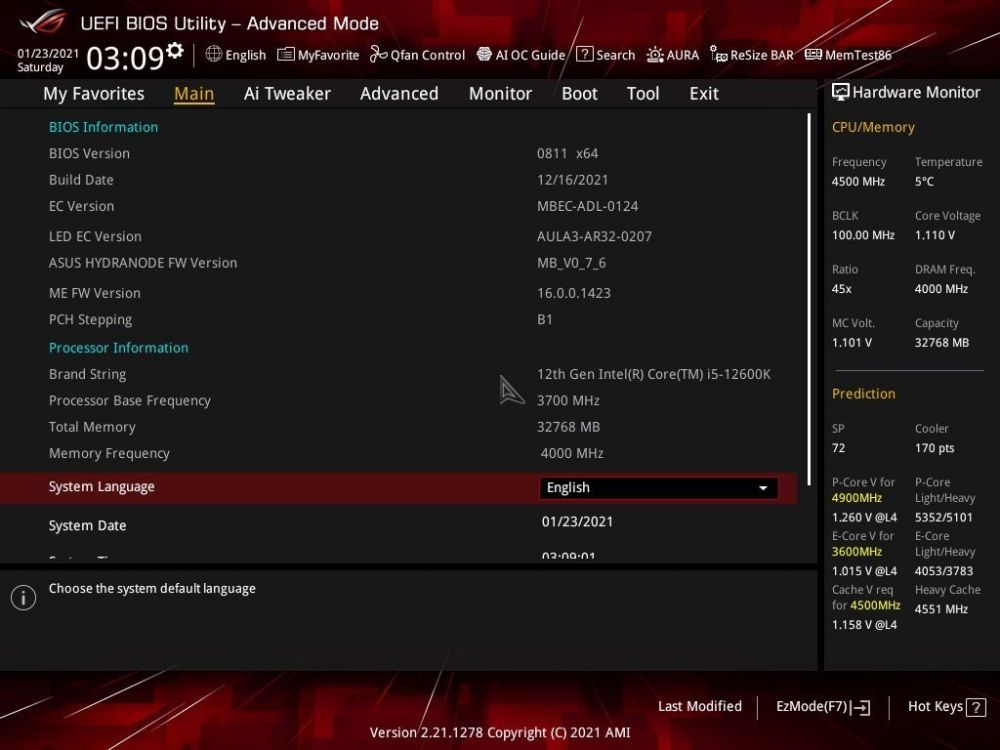

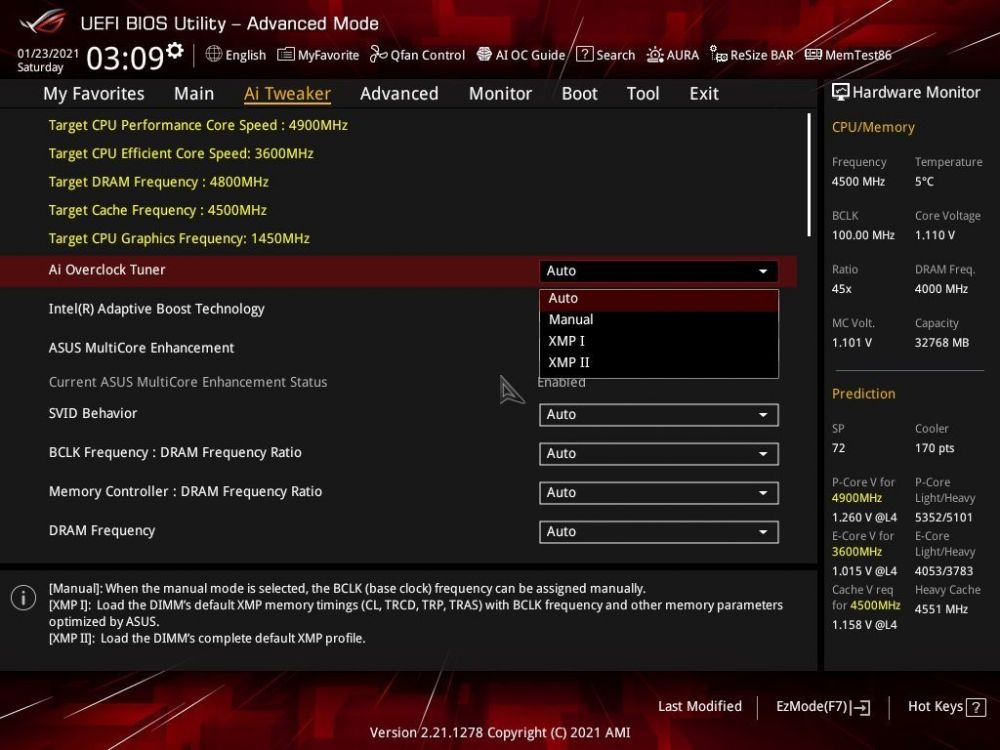

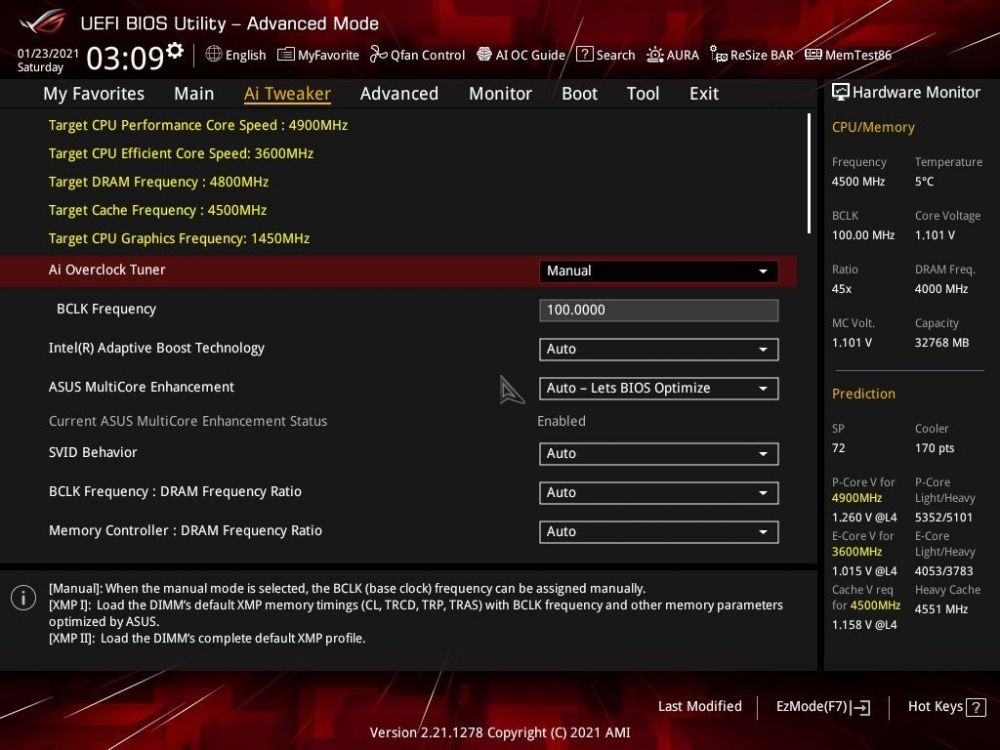

Just wanted to add that the Asus Strix Z690-G board (the mATX DDR5 one) does not appear to support this BCLK overclocking. With the current latest 0811 BIOS (the same one it appears to work with on the other higher-end boards), the "Ai Overclock Tuner" dropdown only gives me Auto, XMP I, and XMP II when run with an i5-12400. I don't have the Manual mode that the B660 boards seem to have. Setting it to either XMP option does not reveal a BCLK field, nor does it change anything in the Ai Tweaker menu. As a note, when an i5-12600K is put in, I do get the "Manual" option in that dropdown, along with the BCLK Frequency setting.

-


Using Yos's phase change after the bench meet, in between doing some testing for country cup. In hindsight, maybe I should have actually tried more than 5GHz...

-

I was looking at some of the stages, and for something like the 3DMark11 Physics stage, the rules mention SystemInfo 5.43 only. It appears a new version of SystemInfo, version 5.44, was released a few days ago (on the 29th). Should the rules be adjusted to something like "5.43 and newer"?

Edit: looks like a number of the other GPU stages have this as well.

-

Just wanted to check in to see about the clarification regarding Xeons, like on the y-cruncher stage, and whether they're allowed or not.

-

Accidentally subbed as Cloud Gate rather than Sky Diver. I've already made a corrected sub in the correct category.

Please delete: https://hwbot.org/submission/4839693

Thanks.

(It turns out that if you select something other than the first item in the dropdown box (which automatically goes to the submission page for that benchmark) and then navigate back to the page with the browser's back button, the page still visibly shows the option you had selected, but clicking the "submit" button takes you to the submission page for whatever the first item in the dropdown is, not the one you had previously selected. I did this accidentally and didn't notice that I was effectively subbing under Cloud Gate (the first option in the 3DMark dropdown) instead of Sky Diver.)

-

Not that I think they'd necessarily be part of the "optimal" set in any given stage as things stand at the moment, but it would be interesting to see how/if they'd affect that y-cruncher 2.5B stage. I figure the "optimal" set of subs would be something like 7350K, 5300G/5350G (forgot it was an Intel-CPU-only stage, but the point somewhat still stands), 11600K, and 9920X/10920X. It would be interesting to see if some random LGA 3647 Xeon could throw a wrench into things. I was just curious, not necessarily asking for them to be allowed in any given spot.

-

Similar to socket count, are Xeons not allowed, except where explicitly stated as being allowed?

-

On 10/1/2021 at 12:42 PM, Leeghoofd said:

We will do XTU 2 only

4 hours ago, yosarianilives said:

Dog pile I'd enjoy cause always nice to bring out collection but again idk about in the comp...

CC 2021: dogpile XTU 2?

-

Yeah, I noticed that happened too with a previous Superposition result when I subbed something for the team cup. It would appear that Unigine's site only allows a single submission to be public-facing/published at any given time.

-

Okay, thanks for the clarification. Just one last question: once you pick a platform, can you change CPUs on the same mobo, or is the CPU considered part of the base platform? Basically, would the only changeable part across stages be the RAM (or other unrelated parts, like maybe a PSU for whatever reason)?

-

On 8/10/2021 at 12:16 AM, Leeghoofd said:

Olympic principle peeps, submit in all 3 stages and you are already in for the lucky draw raffle...

Yes as long as it is a DDR4 platform sporting Corsair memory sticks you are fine ( no ES hardware/bios ), but you must use the SAME platform in all 3 stages.

So, hypothetically speaking, if over the course of the three stages an Intel platform is more optimal for one stage and an AMD platform is more optimal for another, then to be eligible for any of the overall prizes (1st through 5th place), a participant must pick either Intel or AMD and use it for all three stages? And, hypothetically, would that also mean you must stick with either a desktop/mainstream platform or a HEDT platform for all three stages, so you can't use a desktop platform (e.g. Z490) for one stage and a HEDT platform (e.g. X299) for another? I just wanted to clarify whether "platform" in the "All participants have to use the same platform for all 3 stages" limitation means something like "Intel platform" or "AMD platform", or more specifically "the same specific motherboard with one specific CPU".

And related: for the lucky draw raffle, would there be no such restrictions, as long as you entered something in each of the three stages, or does the same restriction as above apply?

And, just checking in advance, but does the same set of DDR4 have to be used for all three stages as well? I'm not sure if it would necessarily come into play, but if perhaps a 2x4GB kit of Corsair memory worked best for one stage, but a different 2x8GB kit of Corsair memory worked better for another stage, would using the two different kits for different stages be allowed, or would it then disqualify you for those overall 1st through 5th places?

-


When Intel moves to big.LITTLE with Alder Lake on the next-gen mainstream platform, do we know how that's going to affect frequencies and such, as reported by CPU-Z? And/or, how might it be affected next year by these potential rule changes to CPU-Z validation? Somewhat related to the third question of this poll: if the little cores don't/can't clock as high as the big cores, wouldn't that effectively be like the situation where you're running some cores at lower frequencies (if not disabling them entirely)?

-


Looks like the changes must have gotten applied a little under 2 weeks ago, since it seems like there had been some submissions for it as recently as Feb 13. Good to know.

-

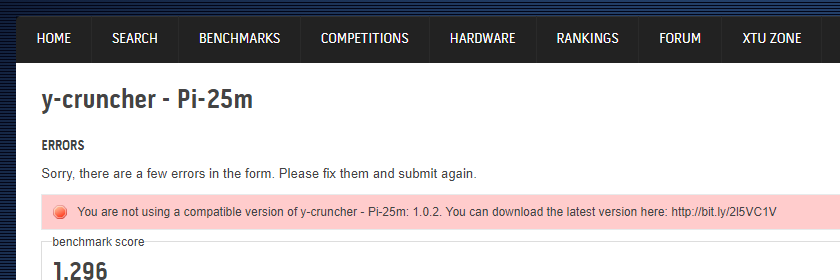

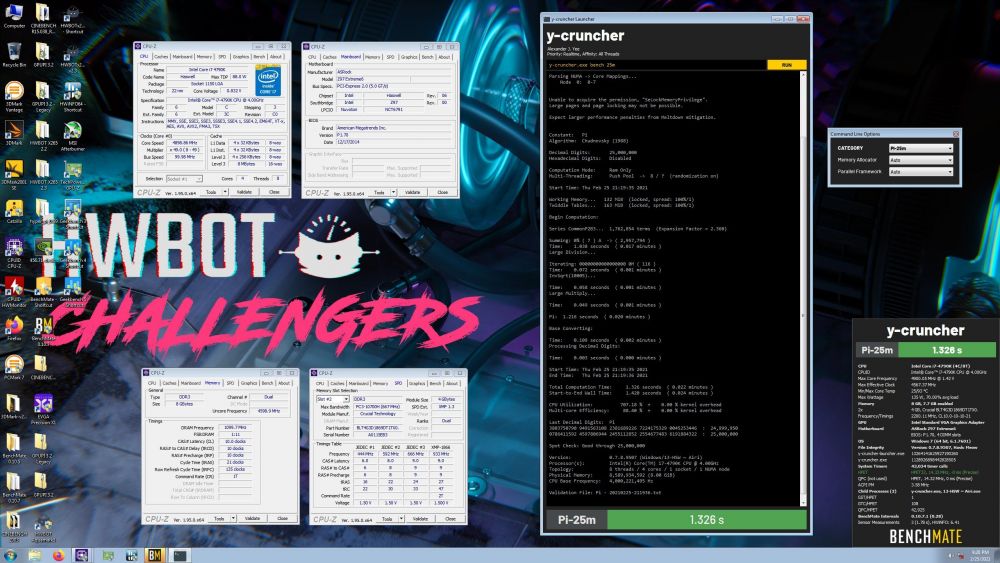

I'm not sure if it's a hwbot submission page issue or a Benchmate issue, but lately when I try submitting y-cruncher Pi-25m results done with Benchmate 0.10.7.1 (I think I've tried it 2 or 3 times), I've been getting the following error:

Runs done on the same system for Pi-1b seem to go through just fine (no error about the version number). Some of my Pi-25m submissions (from BM 0.10.7.1) were going through fine a few weeks ago, so I'm wondering if something just got messed up on the hwbot end. If I submit directly through the program instead, a similar error message pops up indicating that hwbot rejected the submission.

I've attached an example set of files from a submission that gets this error.

-

Oops, forgot to change the wallpaper for the competition. I'll re-run the benches.


-

Just checking: given that LGA 2011/2011-3 and Xeons are allowed, does that mean dual-socket systems are allowed, or is it supposed to be limited to single socket?

-

I was just taking a look at a couple of the results files and screenshots I had for some benchmarking I did earlier this week before submitting them, and when I was looking over the rules again, I noticed that they would all have been "invalid" since I didn't have the platform clock enabled (fortunately, I have some Benchmate ones with HPET enabled, so I can submit those, though they weren't quite as good as the ones done from the HWBOT submitter).

Anyway, I mainly noticed that the y-cruncher HWBOT Submitter lists "HPET" under the "Clock" column if it was enabled. As an example, this submission shows HPET in that column, but this one and this one do not. (I only referenced those mainly because they were a couple of the scores I was chasing.) Should the sample screenshots be updated to show HPET in that column?

Poking through a handful of the top scores, it seems like a number of the submissions from the submitter also don't have the platform clock enabled, at least according to that column (it's really easy to forget the rule and not enable it, and heck, even a couple of my own submissions are technically missing it too). It would appear the rule requiring HPET to be enabled for y-cruncher has been in place for a while.

-

Oops, I think I'll need to go back and re-run this, since I messed up the screenshot and had a couple of the cpu-z windows partially covered.

-

8 hours ago, Panto911 said:

How what are your settings?

I probably just had the laptop set on the high performance power plan, had the Cinebench task set to be either high or realtime priority, and then ran it a few times (taking screenshots when I got a new high score, with this being the best it got). The 3.2GHz clock speed is probably whatever it just happened to be when I grabbed the screenshot, since 32 doesn't seem like any of the standard boost multipliers for that CPU under load, at least according to Wikipedia. It seems that it should either be at 3.1GHz when all cores are under load, 3.3GHz when 2 or 3 cores are loaded, or 3.5GHz single core boost.

-

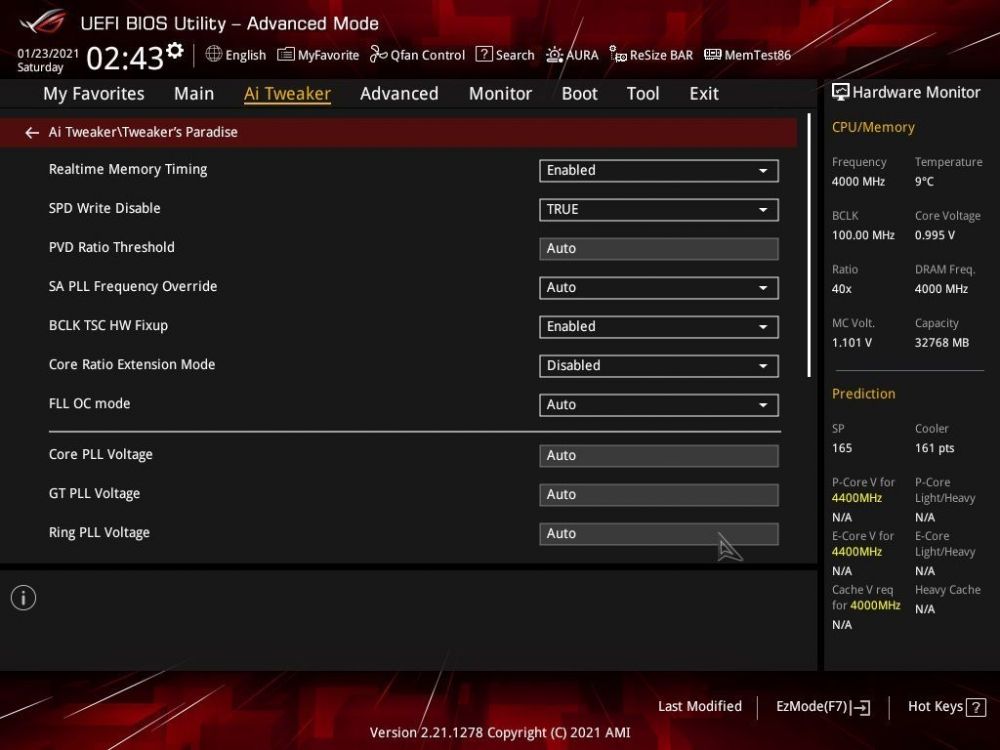

Didn't realize the pictures could be hidden like that. I did some more testing. I did not see any of the BCLK settings on either the TUF Z690-PLUS WIFI D4 or the Strix Z690-A D4. I tested BIOSes 0601, 0807, and 0809 on the TUF (didn't realize there was a 1001 beta as well) and 0707, 0807, and 0901 on the Strix (again, didn't realize there was a new 1004 beta as well, though Jumper118 mentioned in another thread that the 1001 one does not add it either).

One thing I might try at some point, though I don't think it will necessarily work, is setting the BCLK option in the BIOS as a favorite while an unlocked CPU is installed, then checking whether it still shows up and/or is functional after swapping to the locked CPU. My guess is that it will just get hidden in the favorites menu when the option isn't available, but it's still worth a test at some point.