Falkentyne


Posts posted by Falkentyne


-

Hmm, 0705 beta BIOS for Apex. That must be the test BIOS Shamino released to fix some things. Is there one for the Extreme also?

-

With my 10900K, RTLs changed on M12E BIOS 2004 (and I assume on M12A 2004 too). On all older BIOSes (1002, 1003, 070x, 060x and so on) I used 62/62/63/63 // 7/7/7/7 for 2x16 GB @ 4400 16/17/37 at 1.5 V. When I tried this on 2004, the system was wildly unstable, either crashing in BIOS or giving a BSOD / UEFI "winload missing" error. I had to use 63/64/8/8 on M12E 2004.

I am assuming (but do not know for sure) that this applies to newer BIOSes too.

On M13E, I used exactly the same RAM settings and 63/64/8/8 with the 10900K as I did on M12E 2004, and it was just as stable (it just needed 20 mV less BIOS voltage due to less vdroop from the new Intersil VRM at the same LLC). No problems. Same ODTs too (80/48/40).

With an 11900K (RKL) on M13E, everything's different. At 4266 16/17/37, some of the tighter tertiaries that worked fine on the 10900K cause a massive drop in speeds (I think tRDRD=5 is bad here), so those have to be loosened. The ODTs also caused instability: 80/48/40 was no longer stable, but Auto was fine. Either manual RTLs don't work, or no one has figured out how to make them work yet. RTL Init no longer works at all. The "Round Trip Latency Timing" training algorithm does work, though.

-

Are you guys talking about this?

-


They are the same setting.

I've seen several "sub" parameters for that setting, with absolutely no documentation, on some laptops. I think I saw three when looking at an MSI laptop BIOS with AMIBCP 5.02.

I only remember a 0, 1 and 2, however. Or perhaps the 'first' one had recommended values of 0, 1, 2 on some strange Asus BIOS and the second was like 5, 6, 7. I honestly don't know or remember; it's hard to remember BIOS settings with absolutely no documentation.

OK, I found it.

DLLBwen[0] for 1067 (0...7) --> Failsafe: 0, Optimal: 0

DLLBwen[1] for 1333 (0...7) --> Failsafe: 0, Optimal: 1

DLLBwen[2] for 1600 (0...7) --> Failsafe: 0, Optimal: 2

DLLBwen[3] for 1867 and up (0...7) --> Failsafe: 0, Optimal: 2

Don't ask me what any of that means.
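If you want to keep that listing around as data (e.g. when diffing BIOS defaults), here is a minimal Python sketch. The bins and failsafe/optimal values are copied from the AMIBCP listing; the function name and the rule for picking a bin (highest bin the data rate reaches, below 1067 falls back to bin 0) are hypothetical assumptions, and this says nothing about what DLLBwen actually does.

```python
# Hypothetical lookup for the DLLBwen table quoted above (AMIBCP listing).
# Bins and failsafe/optimal defaults are copied from the listing.
# ASSUMPTION: a data rate uses the highest bin whose speed it reaches,
# and anything below 1067 falls back to bin 0. The BIOS says nothing about this.

# (index, bin speed in MT/s, failsafe, optimal); every entry accepts 0...7
DLLBWEN_BINS = [
    (0, 1067, 0, 0),
    (1, 1333, 0, 1),
    (2, 1600, 0, 2),
    (3, 1867, 0, 2),  # "1867 and up"
]


def dllbwen_entry(data_rate):
    """Return (index, failsafe, optimal) for a DDR data rate in MT/s."""
    chosen = DLLBWEN_BINS[0]
    for entry in DLLBWEN_BINS:
        if data_rate >= entry[1]:
            chosen = entry
    index, _speed, failsafe, optimal = chosen
    return index, failsafe, optimal


print(dllbwen_entry(1600))  # (2, 0, 2)
print(dllbwen_entry(3200))  # (3, 0, 2)
```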

-

1 hour ago, Mr. Fox said:

Thank you. Great info, as usual. So, what you're saying if I understand correctly, just ignore (even hide) all of the HWiNFO sensors mentioned above because they are meaningless except for "3) Die-sense reading (direct from VRM)" is that correct?

If I disable SpeedStep my multipliers are ignored and the CPU runs at non-turbo only. Not sure if that is user error or what.

Well, the other two vcore readings are useless. VR VOUT (along with current IOUT and power POUT) are the readings you want. You also obviously need DRAM, VCCSA, VCCIO and the others.

I have no idea about your SpeedStep problem. I disable SpeedStep on every Gigabyte BIOS and I've never had an issue like this. I tested X4 briefly, and some others.

Maybe you need to enable Turbo boost ratios manually and keep those at auto.

-


1 hour ago, Mr. Fox said:

@Falkentyne - this is with the BIOS using fixed voltage set at 1.375V. I am almost thinking the voltage values reported are wrong. Look at how low the temps are. CPU-Z shows a reasonable value similar to what I have set in the BIOS, but HWiNFO and Core Temp both show an insane value. I'd like to ignore the HWiNFO info, but I don't want to kill this CPU if the information is accurate. I am wondering if the SVID is being reported erroneously (which I would normally disable, but don't see an option for that in this BIOS). The behavior is the same with stock BIOS F3, F5 and F6b and now with X4. How could my temps be this good if the voltage was really as high as what Core Temp and HWiNFO say it is? (I seriously doubt the voltage can be that high with the temps this low.)

Here's what it shows in the BIOS.

And, here is what HWiNFO and Core Temp are telling me.

Edit: @Falkentyne - another discovery and question. Is it normal for SpeedShift (not SpeedStep) to cause TurboBoost to be disabled if SpeedShift is disabled? Is that something new, or do I need to just return this motherboard and buy something different that actually functions correctly? This was my first-ever Gigabyte product and so far I'm extremely unimpressed with the firmware. I'm used to Asus and EVGA firmware, but I don't know if this nonsense I am seeing affects all Z490 or just this Aorus Master motherboard's firmware.

With that board, please use HWiNFO64 and use the VR VOUT field in the VRM section for accurate voltage monitoring. This is the "die-sense" voltage that you may have heard of, and it is extremely accurate. It is the value read by the VRM controller's ADC.

**YOU MUST** be using the most current version of HWiNFO64. Older versions do not support VR VOUT. VR VOUT only got added because I asked Martin to add support for it, but he had some difficulty accessing the VRM. Shamino (Asus), believe it or not, helped him get it working on a *Gigabyte* board. Yeah, ironic, I know.

The vcore you see in CPU-Z is the old, typical "Super I/O" voltage reading, which is always going to be above (or way above) the real vcore.

In fact, in HWiNFO64 there are going to be three vcores:

1) Super I/O reading (ITE 8688E).

2) Socket MLCC reading (ITE 8792E).

3) Die-sense reading (direct from VRM) - Intersil 96269 (I think).

The die-sense reading is the reading you want to use.

Unfortunately, no other program knows how to access the VRM directly; only HWiNFO64 does.

------

I also do not use SpeedShift or SpeedStep. I disable all of that stuff, like you told us to do on NBR long ago.

I just disable all power saving and set a multiplier randomly.

Also, one thing I noticed on Gigabyte boards is that the "Thermal Velocity Boost" multiplier boost seems to be enabled by default regardless of CPU temps. For example, at "stock" operation, the Gigabyte boards will turbo to 4.9 GHz at all times, instead of 4.9 GHz below 70C and 4.8 GHz at 70C+. I'm not sure if this affects the "2 core" 5.3 GHz boost, however.
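To make the difference concrete, here is a tiny Python sketch of the stock all-core behavior described above. The 4.9 GHz / 4.8 GHz values and the 70C threshold come from the post; the function name and the `honor_temp_check` flag are hypothetical, and the 2-core 5.3 GHz boost is deliberately not modeled.

```python
# Sketch of the stock all-core turbo behavior described above (10900K-class).
# 4.9 GHz below 70 C and 4.8 GHz at 70 C+ come from the post; the function and
# the honor_temp_check flag are hypothetical. The 2-core 5.3 GHz boost is not modeled.

TVB_THRESHOLD_C = 70


def stock_all_core_ghz(temp_c, honor_temp_check=True):
    """Expected all-core turbo (GHz) at a given CPU temperature in C."""
    if honor_temp_check and temp_c >= TVB_THRESHOLD_C:
        return 4.8  # TVB bin dropped once the chip reaches 70 C
    return 4.9      # TVB bin active (or temperature check ignored)


print(stock_all_core_ghz(75))                          # 4.8: intended behavior
print(stock_all_core_ghz(75, honor_temp_check=False))  # 4.9: what the Gigabyte boards appear to do
```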

-

8 hours ago, Mr. Fox said:

@Sparky's__Adventure and @Hicookie - thanks much. I just flashed X4 on my Aorus Master.

Do you guys, or anyone else, have any suggestions on how to control the idle voltage? On my 10900KF the BIOS has voltage set at 1.452 (Fixed) but at idle in Windows it is well above 1.600V. What am I doing wrong?

The Great Mr Fox.

Please use "Fixed" mode, not "override" mode.

Override mode changes the VID to the override value. This is identical to "override mode" on your Clevo laptop, oddly enough. If AC/DC Loadline is not set to "1" (0.01 mOhm) or another low mOhm value, vcore will rise substantially at load, even more so if LLC calibration is set higher than "Standard".

Fixed mode is the "override" you were used to on Z390 desktops. That's actually what you want to use.
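A rough way to see why the loadline settings matter in override mode: the Python sketch below is a simplified static model, not vendor documentation. It assumes that in override mode the CPU's VID request is the override value plus AC loadline times current, after which the VRM droops by the LLC resistance, while in fixed mode the VRM ignores the VID request and only applies LLC droop. The 1.35 V / 200 A / 0.4 mOhm numbers are made up for illustration.

```python
# Rough static model of load vcore in the two BIOS modes described above.
# ASSUMPTIONS (not vendor documentation):
#   - "override" mode: the CPU's VID request is the override voltage plus
#     AC loadline * current, and the VRM then droops by LLC resistance * current;
#   - "fixed" mode: the VRM ignores the VID request and only droops by LLC.
# Units: mV, A and mOhm (1 A through 1 mOhm = 1 mV). Transients are ignored.


def load_vcore_override(set_mv, amps, ac_ll_mohm, llc_mohm):
    vid_request_mv = set_mv + amps * ac_ll_mohm
    return round(vid_request_mv - amps * llc_mohm, 1)


def load_vcore_fixed(set_mv, amps, llc_mohm):
    return round(set_mv - amps * llc_mohm, 1)


# Hypothetical example: 1.35 V set, 200 A load, flat-ish LLC of 0.4 mOhm.
print(load_vcore_override(1350, 200, 1.1, 0.4))   # 1490.0 (AC loadline left at 1.1 mOhm)
print(load_vcore_override(1350, 200, 0.01, 0.4))  # 1272.0 (AC loadline set to "1" = 0.01 mOhm)
print(load_vcore_fixed(1350, 200, 0.4))           # 1270.0
```

Under these assumptions the AC loadline term is what pushes override mode well above the set voltage at load, which is why setting AC/DC Loadline to "1" or using fixed mode keeps things close to what you typed in.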


-

4 hours ago, GtiJason said:

uCode or uCode removed ? Been trying dif bios because I have all kinds of issues/bugs. Can't get MemTweakIt to even show timings all I get is "About" tab hit Apply does nothing, hit OK program closes. TimingConfig 4.0.3/4 shows 65535 for every timing. Tried XP and W10 May 2020 update

You need to -uninstall- 4.0.4, not just install 4.0.3.

Uninstall both. Reboot after each. Then install 4.0.3. Reboot. 4.0.3 will then work.

4.0.3 will NOT work if you did NOT uninstall 4.0.4.

This may also fix the MemTweakIt issue.

-


20 minutes ago, sergmann said:

X-BIOS-Versions are for Extreme-OC and XP Support.

Have you tried the last original Version from Giga Homepage?

As I said, I've tried every BIOS version that gets released or leaked. I don't remember if I tried F3, but those old, obsolete BIOSes didn't have working VF points.

The person who told me this bug was fixed in X5 is a Gigabyte engineer but I don't know if he's with the BIOS team.

He's the same person who sent me test BIOSes when I was helping Gigabyte fix the very serious DVID overvoltage bugs on Z390 when switching to fixed mode (T0D and T1D for the Z390 Master came from him).

-

5 hours ago, sergmann said:

Who says that X5 Bios will help you when it is not even officially released ?

have you tried last official Bios or do you need one for Extreme-OC?

A Gigabyte rep told me that the 1T bug with dual-rank DIMMs should be fixed in X5.

I was not aware that no one had it yet.

The bug: on both 2018 (October sticker) and 2020 (February sticker) G.Skill 3200 CL14 2x16 GB Trident Z RGB (F4-3200C14-32GTZR) sets, 1T command rate does not work at XMP (basic 3200 CL14, all timings on Auto). The system just boot loops and resets after repeated training failures. The 2020 sticks are much better clockers than the 2018 sticks at 2T.

-


X5 BIOS is supposed to fix the problem with dual-rank DIMMs and 1T command rate (right now 1T completely fails to work, even at stock XMP settings).

3200 CL14-32GTZR G.Skill (2x16 GB) + 1T = boot loop.

-

Anyone have the X5 bios for the Z490 Aorus Master?

-

Please double-check the TXP setting in *gaming* before all of you use it.

Check your 1% lows!

One user tried TXP 4, and his benchmark/latency scores dropped nicely by a few ms, but his 1% lows were worse. He tested it by toggling it on and off on each reboot (Gigabyte board, so it was already in the BIOS).

So be sure to check it (assuming that some of you guys are actually gamers also).

-

57 minutes ago, marco.is.not.80 said:

Thank you both for taking the time to answer my questions regarding the vrm2.exe tool and my amperage inquiry.

Falkentyne,

you say "So no I can't answer this" but in reality you may not be able to say exactly what the maximum is but certainly we can deduce that as one is approaching 200 amps it might be best to consider not going much further ? Is that fair?

Ok let me put this a completely different way.

Maybe this will explain the awkwardness of what you're trying to ask a video gamer (me) who just plays videogames and who is not a hardware engineer.

Let's say you set 1.45 V in BIOS with Loadline Calibration level 3, i.e. 1.1 mOhm of LLC. This is a perfectly safe setting, and 1.1 mOhm of LLC is Intel's default vdroop.

Put 245 amps into the CPU and that's 1450 mV - (245 A * 1.1 mOhm) = 1.180 V. Clearly that's safe. If you want to "assume" 1.52 V is "max VID", ignoring the "1.52 V + 200 mV" thingy, that would be 1520 mV - (245 A * 1.1 mOhm) = 1.250 V. Now, that's an ALL CORE LOAD. All 10 cores.

So now, you said a one-core load is 24.5 amps per core (245 / 10). That makes sense.

Now, what happens if you have a ONE CORE (2 thread) application that is drawing 24.5 amps by itself, with NO other cores loaded? Then what???

At that 1.450 V, the load vcore after vdroop will be 1450 mV - (24.5 A * 1.1 mOhm) = 1.423 V! So according to the above, that "one" core with a 24 amp load on it will be getting a LOAD VCORE of 1.423 V!! By that logic it should degrade that core pretty fast, shouldn't it? Because the total current is so low, the load vcore is going to be super high.

Unless EACH CORE has its OWN VOLTAGE? But only on HEDT does each core have its own voltage rail, because it's supplied by VCCIN (1.8 V).

Do you see the position you put me in here?? I have absolutely NO idea or clue what I'm talking about anymore or any insider knowledge of how these chips work.

I don't know the answers to these questions. I'm sorry.
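For anyone who wants to redo the droop arithmetic from this post with other numbers, here is a minimal Python sketch of the simple static formula used above (load vcore = set vcore minus current times LLC resistance, with 1.1 mOhm as the default). The function name is made up, and it only covers static droop: it ignores transients and does not answer the per-core question.

```python
# Static vdroop check for the numbers in this post:
#   load vcore (mV) = set vcore (mV) - current (A) * LLC (mOhm)
# 1.1 mOhm is the LLC level 3 / Intel default value mentioned above.
# This only covers static droop; it does not answer the per-core question.


def load_vcore_mv(set_mv, amps, llc_mohm=1.1):
    return set_mv - amps * llc_mohm


cases = [
    ("all-core, 1.45 V set", 1450, 245),
    ("all-core, 1.52 V 'max VID'", 1520, 245),
    ("one core's share, 1.45 V set", 1450, 24.5),
]
for label, set_mv, amps in cases:
    print(f"{label}: {load_vcore_mv(set_mv, amps):.2f} mV")
```

This prints 1180.50, 1250.50 and 1423.05 mV, which are the 1.180 V, 1.250 V and 1.423 V figures worked out above.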

-

11 hours ago, marco.is.not.80 said:

OC Family,

I just got my Apex XII and a 10900k (can't believe I found both in stock at the same place at the same time - thumbs up for www.neweggbusiness.com) and have a few questions.

1) Can someone explain to me what exactly the legacy memory mode is all about and do I need to worry about it if I'm just running the stock latest BIOS from the Asus support site? Also, all this talk about A2 only - is this just in regards to the high clock/low latency settings (like 4800 14-13-13 or whatever)?

2) The VRM tool listed in the first post - It works how? I just make a task scheduler event and run it at login!??! What does it do exactly? What should I be looking for when monitoring the changes it makes?

3) I was pulling 190 amps according to hwinfo by doing an overclock of 52 on all cores/0 avx/50 uncore - just how many amps do you think is safe with something like chilled water setup?

4) I can't believe how cool the 10900k runs. Not a question - just a comment. Some of the reviews I've read make me wonder if they even actually used it.

Any insights would be appreciated. Thanks, love reading and learning from you all.

Marco

VRM tweak enables the adaptive transient algorithm, which can reduce vmin by up to 10 mV in some loads. It won't do much if your cache ratio is set too high, though (cache is far more sensitive to transients than core is).

Max current is 245 amps, or about 24 amps per core, but no one knows what load vcore should not be exceeded at a given number of amps. The 1.52 V ceiling is different now, since the chip can potentially request 1.50 V load voltage at the max turbo multiplier with all settings on Auto, max AC/DC Loadline (1.1 mOhm) and 1.1 mOhm (level 3) Loadline Calibration. This is due to something about "1.520 V max VID + 200 mV = 1.720 V initial".

Under the old system, you would not want to exceed 1.30 V load voltage at 200 amps of current (1520 mV - 200 A * 1.1 mOhm), but the new system says "1.520 V max VID + 200 mV Serial VID Offset", and not a single person knows why it doesn't just say "1.720 V max VID".

On Z390 this could be enabled or disabled via a VRM register (33h). Asus enabled it by default and Gigabyte disabled it by default. Gigabyte did give you a way to enable it, but it was extremely buggy and unreliable. It only worked half properly (without locking out ALL voltage control afterwards) in test BIOS T0D on the Z390 Aorus Master, as they didn't understand the difference between "SVID Support" and "SVID Offset". In all OTHER BIOS versions, enabling SVID Offset disabled voltage control afterwards, and in ALL BIOS versions, enabling SVID Offset with fixed vcore at 1.20 V would send 0 V into the CPU with no post code readout. It seems like everyone has this enabled by default on Z490 now.

Sorry, I know nothing more.

So no, I can't answer this. It seems like the chip can request "up to" 200 mV extra VID whenever it wants under certain conditions. Only Intel can answer this if someone wants to contact them.

-

6 hours ago, speed.fastest said:

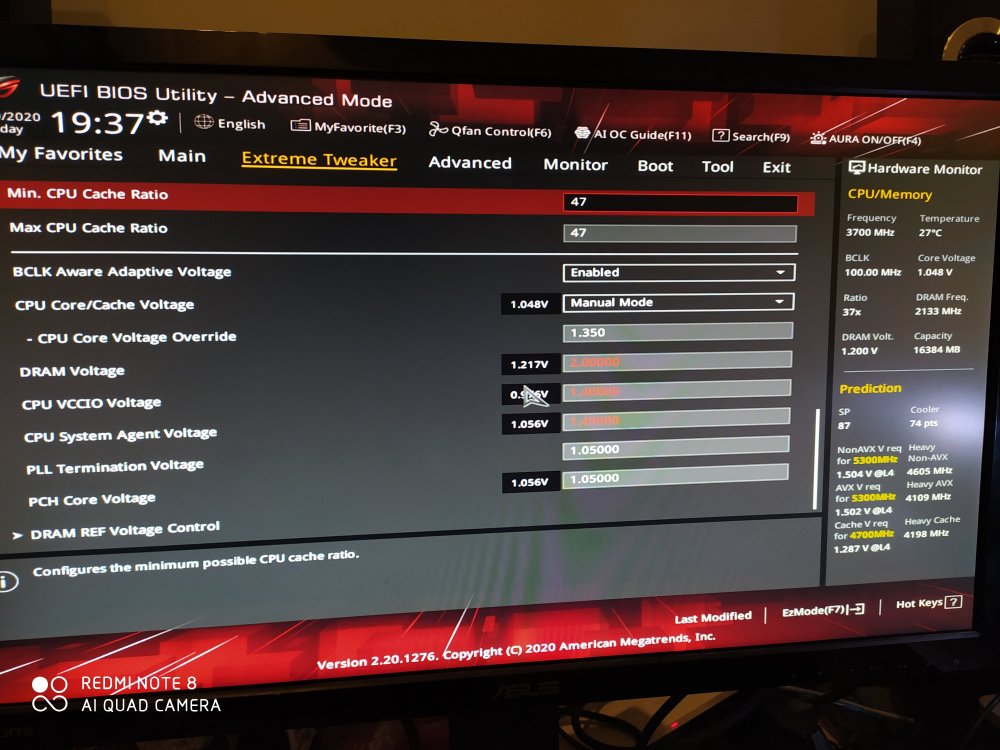

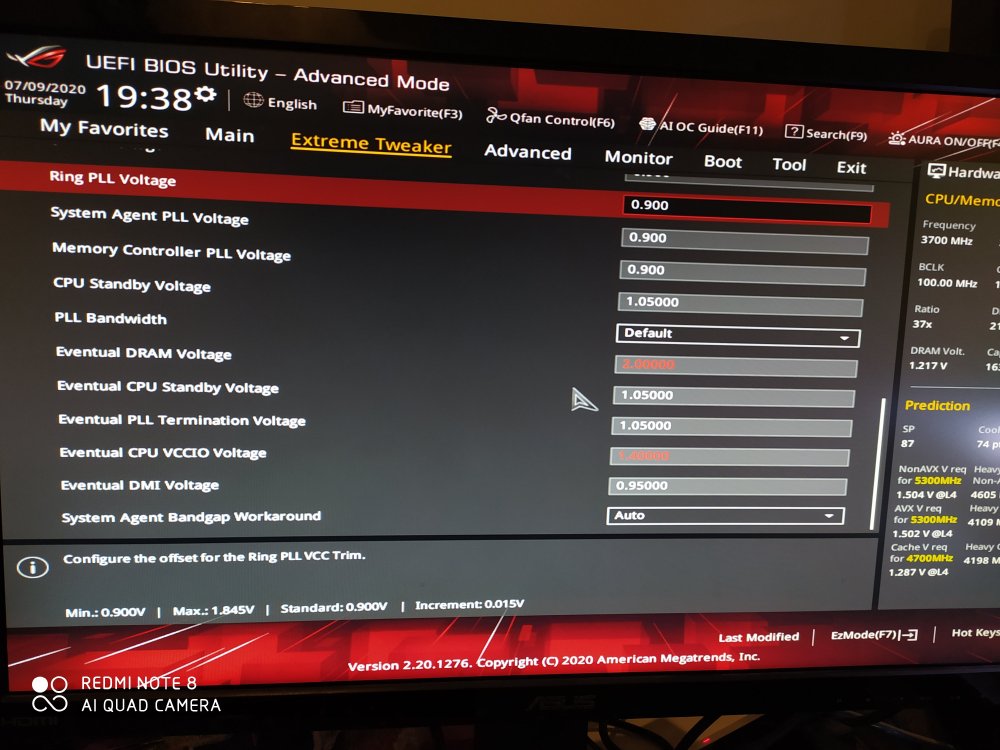

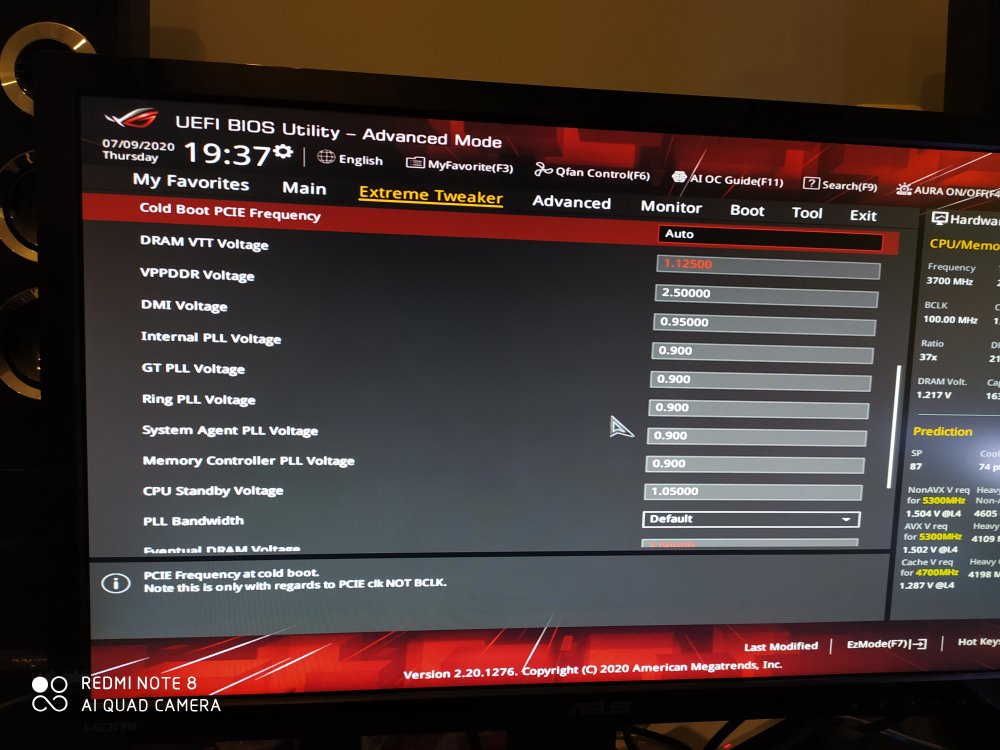

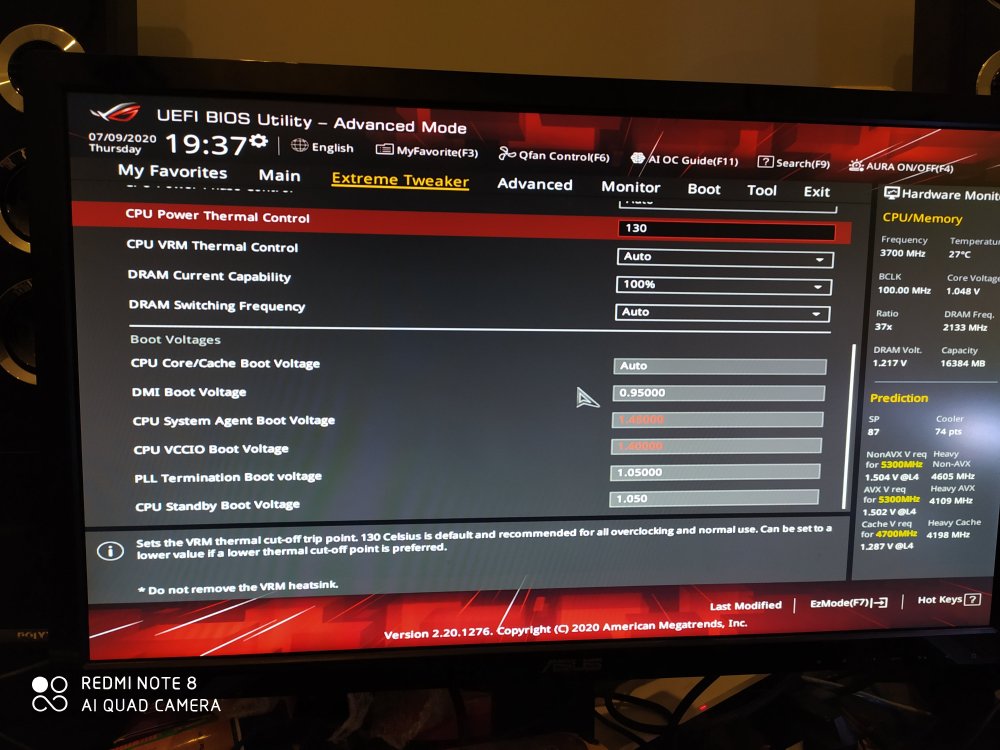

Hello all, at first i know this is XII Apex thread, but i have XII Extreme and have little issue. With LN2 Mode Enabled, i got 80°C CPU Temp at idle. All voltage set manually already like in this photo. BIOS is latest retail 0607, can we have M12E bios with unlocked dram voltage without ln2 mode? Thanks

FYI for Turbo V i can use m12a version on this page without any trouble.

What happens if you set PLL Bandwidth to "Level 1"?

Are you still 80C idle?

-

12 minutes ago, FireKillerGR said:

Work fine here

Getting '404' also from here.

Location: Riverside, California.

Outage?

-

2 hours ago, FireKillerGR said:

you managed to get usb overcurrent already?

How is it possible to get USB Overcurrent in non LN2 mode?

2 hours ago, keeph8n said:

I've had it since I received the board.....

On Ln2 and ambient? Open bench setup?

-

6 hours ago, Cancerogeno said:

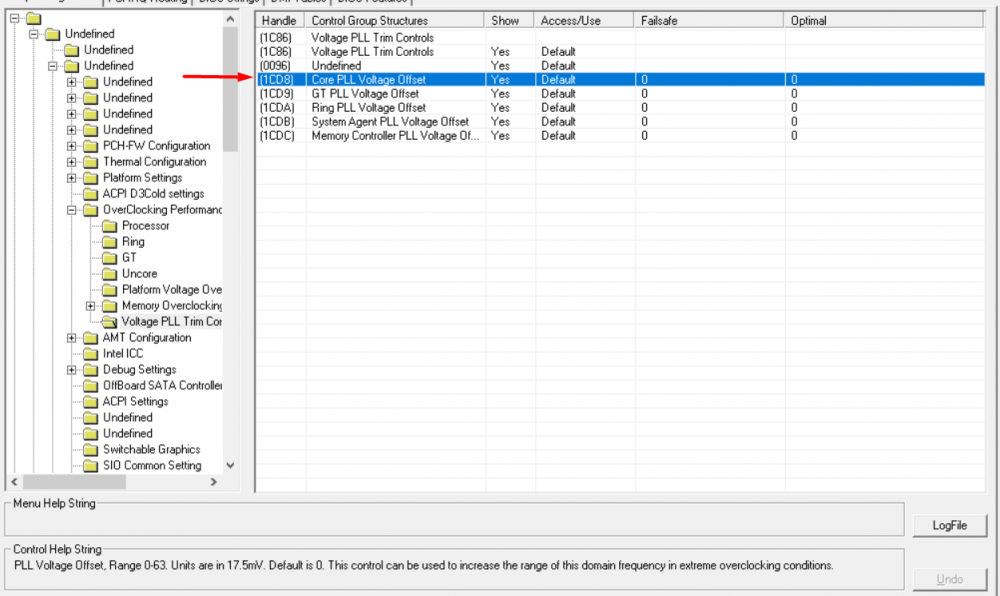

@unityofsaints Found a core PLL offset under "overclocking performance menu" in the bios, it's a hidden menu by default, but theoretically should be unlockable,

that menu is basically in every modern intel based mobo.

Guess it's some kind of menu imposed by intel, i'm not sure if those plls would even work but might be worth a shot

These are already in the BIOS. No need to unlock them. It's also HIGHLY questionable whether the AMI versions are even linked to anything.

I remember setting CPU PLL Voltage Offset on my MSI GT73VR to something like +600 mV through the unlocked AMI menus and it didn't do a thing. And I have no idea if this was supposed to be "CPU PLL Overvoltage" or "CPU PLL Voltage". But the AMI help text looks almost identical to the Gigabyte version of "CPU PLL Overvoltage +mv".

The ASRock BIOS seems to mention that all these "Overvoltage +mv" rails start at 0.9 V. I think Elmor said something about all of these "overvoltage" rails being clipped from the CPU PLL voltage.

On my Z390 Gigabyte, going higher than +105mv on CPU PLL Overvoltage +mv on air cooling would just cause a clock watchdog timeout.

MSI calls them "SFR Voltage". Gigabyte calls them "xxx rail PLL Overvoltage +mv". So they're already there.

I think what you may be looking for is "CPU Internal PLL Voltage". While I don't understand any of this subzero stuff, I think this rail feeds the other rails.

On Z390, CPU Internal PLL Voltage (CPU PLL Voltage) and CPU PLL OC Voltage needed to be at least 150 mV apart. It seems like CPU PLL Voltage is gone from all boards except Gigabyte Z490 (where it's still there). CPU PLL Overvoltage +mv is called something else on Asus; everyone calls it something different.

I don't think Asus or MSI have this setting anymore. Shamino said it's not needed. Maybe it's fed by another rail.

CPU PLL OC Voltage (Asus=PLL Bandwidth, Gigabyte VCC PLL OC Voltage) is needed.

-


The VRM tool has been tested well and should be fully safe.

For best results, use 500 kHz and set VRM options to full phase mode. Works well on both Maximus 12 Extreme and Apex.

On air/water, Realbench 2.56 should get a bigger benefit than Cinebench R20 as the transients are more violent in RB. Feel free to post your results!

-

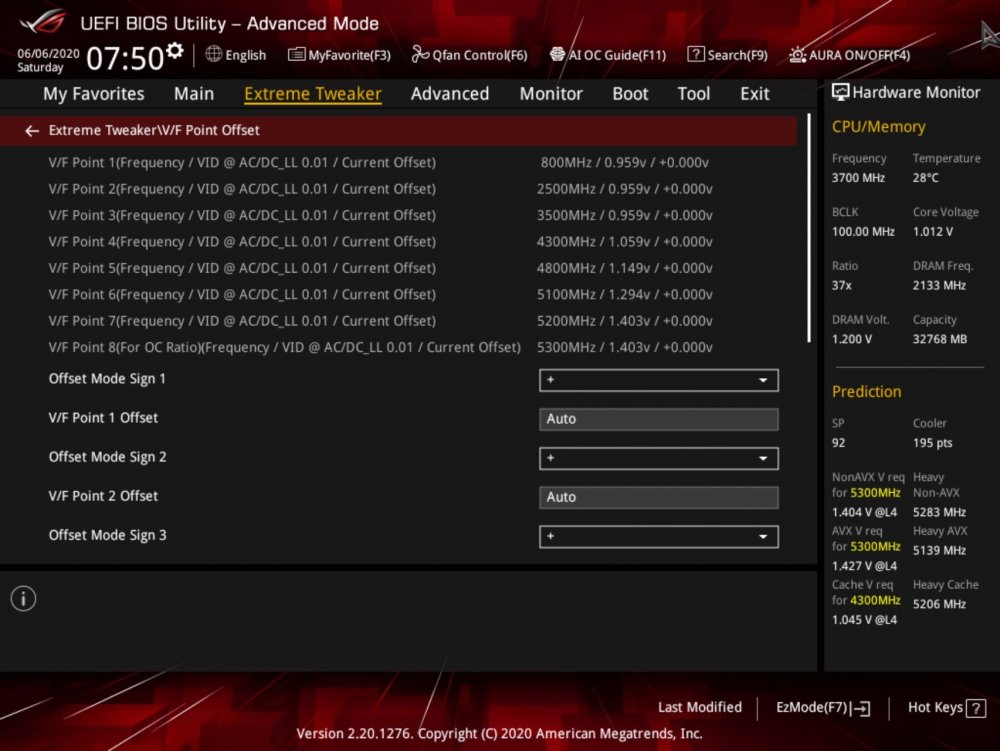

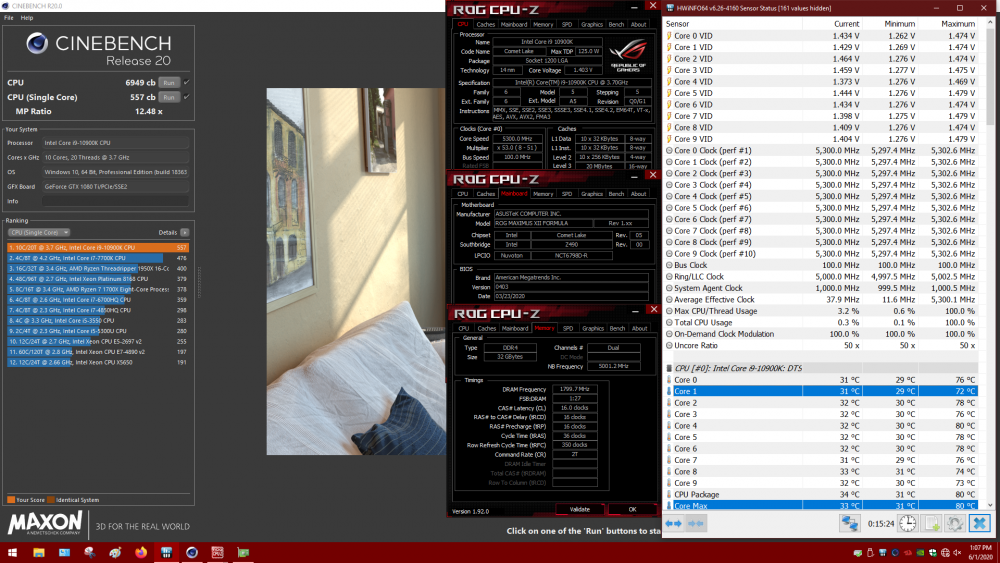

16 hours ago, ThrashZone said:

Need voltage at full load for this to be a valid submission. Idle voltages mean nothing.

Please post a picture of CB20 during full core load, with the vcore window open in HWiNFO64 or CPU-Z, so we can see your vcore at full load.

Also, your SP is bugged. This is a common problem for people using old BIOS versions. Your first three VF points are exactly the same and too high. This throws off the SP rating too. Your chip could be anywhere from SP88 to SP105.

To fix this you MUST update your BIOS.

1) Update to version 0607.

2) After updating, wait until it says "Press F1 for Intel power limits".

3) Press F1, enter BIOS, then power off the system.

4) Clear CMOS with the Clear CMOS button on the back panel (or the jumper/button onboard).

5) Boot, wait, then press F1 for Intel default power limits. Boot to Windows without changing anything in the BIOS.

6) Run Prime95 small FFT with AVX DISABLED for 5 minutes (with the F1 defaults).

7) Reboot to BIOS.

8) Your SP should now be shown correctly. If you want to go back to an old BIOS afterwards, you can do that after posting fixed SP/VF points, but if they get bugged again, it will throw off the prediction and AI overclocking when using an old BIOS.

-


37 minutes ago, ProKoN said:

is it just the little notches (771 mod) or is the pcb dimensions different....no clue on this one

You know you could do a little research before asking something like this, which would take...oh...less than 5 minutes of your time

Answer: Pin count changed, increased

PCB spacing, identical (1151 coolers will work)

Power pinouts: changed, added, re-arranged.

In other words, utterly, 100% completely no.

-


The problem here is that some people are posting load voltages during CB R15/R20, while others are posting BIOS set voltages. We need consistency. If people are going to do that, at least do what Nik did above me and show your idle and load voltages so we have something to go on. There is a MASSIVE difference between getting 1.28 V at load during CBR20 @ 5.3 GHz and claiming that's your BIOS voltage....

A BIOS voltage of 1.30 V at 5.3 GHz is going to droop down to about 1.2 V at load with an aggressive LLC... I don't even think a super golden chip can handle that unless it's below ambient.

-


6 hours ago, Bullshooter said:

All chips must be binned under LN2; at the weekend we tested 250 pieces of 10900K.

One with SP 103 can do 5.3 on an AIO with 1.295 V in R20 with AVX offset 0; on LN2 the chip can do 6890 R15 with 1.625 V vcore.

The other chip, SP 64, can't do 5.3 on an AIO in R20, but the chip can do 6850 R15 on LN2 with 1.55 V vcore.

Interesting. So the worse bin (higher VID, lower SP) chip scaled better on LN2 (less vcore on LN2) than the lower VID chip?

Do most chips follow something close to this?

-

ROG Maximus XII Apex (in Comet Lake (Z490) & Rocket Lake (Z590) OC):

Doesn't the M12A have two separate ME chips (corresponding to the main and backup BIOSes the chips are linked to) that can be force-flashed with the ME firmware by the EVC2 device? They are separate from the BIOS chips. Just do a "read" on the chips and check the file size; then you will know.