Posts: 1003

Days Won: 41


Posts posted by _mat_

-


20 hours ago, Leeghoofd said:

@_mat_ Is it possible to make the Cinebench font smaller and shorten the name to e.g. something like in the screenie? Can the result screen also be moved around and/or partly covered (i.e. not always on top)?

We have to think about whether all info needs to be shown in the score screen. For me, the total score and BenchMate's green approval of the checked run are sufficient.

Sorry for my crap Paint skilzzzz :p

You really need to think differently here. One of the main goals of BenchMate is to make bench life easier by simplifying the rules and removing everything that is no longer necessary. That's easily possible in this case.

So the important questions here are:

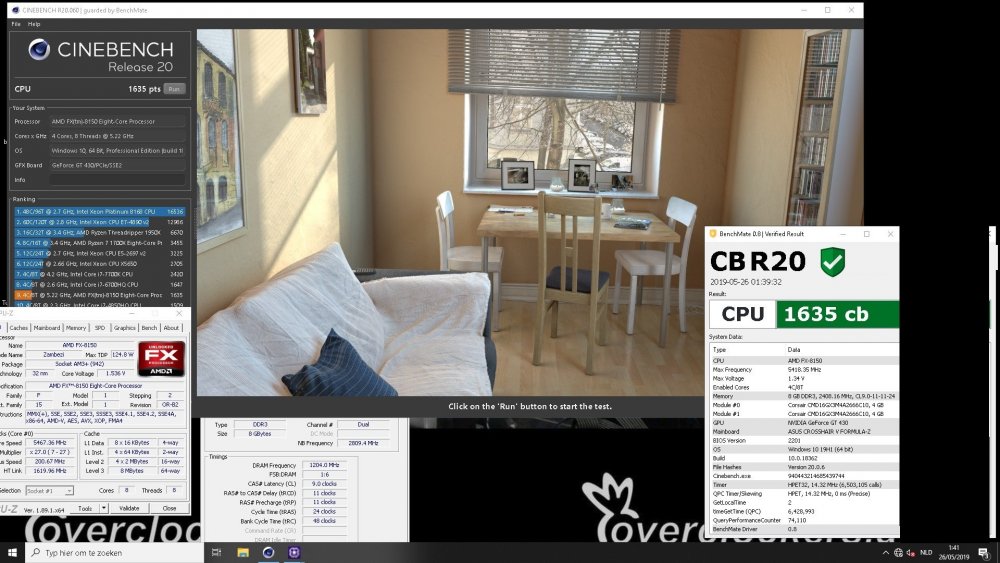

Do we need to see the Cinebench rendering?

No, we actually don't, and this shouldn't be the way to moderate Cinebench anyway! There are multiple ways to show a fullscreen image instead of the real benchmark window and desktop. This could be an application that does exactly that, or just a desktop wallpaper to trick the screenshot. It's impossible to verify such a cheat, and even if I tried, it would turn into a cat-and-mouse game.

But we don't have to go down that road, because there are other, much better ways to check that everything has been rendered correctly. We can check the file hashes of the textures and, even better, of every other file that CB uses for the run. Additionally, we can upload a second screenshot that shows the window buffer of the CB window. That's also not the preferred method, because it's easy to write into that buffer at any time, but it's not as easy as a fake wallpaper. Used together with the file hash check, it adds protection and gives us (and fellow benchers) a nice way to inspect the benchmark window on its own, even when it's overlapped by other windows.
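A minimal Python sketch of such a file hash check. It assumes SHA-256 and a manifest that maps relative file paths to known-good hex digests; which hash BenchMate actually uses and how the reference list is stored are not described here, so both are illustrative assumptions.

```python
import hashlib
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in chunks so large texture files don't have to fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_files(root: Path, manifest: dict[str, str]) -> list[str]:
    """Return the relative paths that are missing or whose hash differs from the manifest."""
    mismatches = []
    for rel_path, expected in manifest.items():
        file_path = root / rel_path
        if not file_path.is_file() or sha256_of(file_path) != expected:
            mismatches.append(rel_path)
    return mismatches
```

An empty list means every listed file matches its reference hash; any entry names a file that was swapped, edited or removed.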

This would completely remove the many problems we have with overlapped benchmark windows. We would end up with fewer rules for the screenshot, less to moderate and more valid results.

Do we need the CPU-Z windows?

As you can see for yourself in this screenshot, they have to be placed very carefully so they don't overlap anything important. You have to play around with the window order to get it right, not to mention that it takes time to open two or three CPU-Z windows and fiddle around with them. I think this was fine when we had no alternative, but we have the chance to improve the process right now, so why don't we use it?

So the question to ask here is: what information do those CPU-Z windows offer that BenchMate doesn't already have?

First of all, the information in CPU-Z is captured at the time the screenshot is made, not during the benchmark run itself. The CPU can be downclocked manually after the run, or, as often happens with air and water results, it has to downclock because the CPU gets too hot, or because a negative AVX ratio offset was applied and the benchmark used AVX. The same goes for voltages, of course: they can differ greatly due to load-line calibration settings, dynamic voltage options and the like. So the information is outdated and not very reliable.
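To illustrate why a record taken during the run is more telling than a CPU-Z window opened afterwards, here is a small Python sketch that summarizes clock samples collected while the benchmark runs. The `ClockSample` type and the idea of feeding it from a sensor source such as HWiNFO are hypothetical; how BenchMate actually records clocks is not described here.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class ClockSample:
    """One hypothetical sensor reading taken while the benchmark is running."""
    t_seconds: float   # time since the run started
    core_mhz: float    # effective core clock at that moment


def summarize_run(samples: list[ClockSample]) -> dict[str, float]:
    """Min/avg/max core clock over the run; a dip exposes throttling or an AVX offset."""
    if not samples:
        raise ValueError("no samples recorded during the run")
    clocks = [s.core_mhz for s in samples]
    return {"min": min(clocks), "avg": mean(clocks), "max": max(clocks)}


# Example: the run dips to 4800 MHz under AVX load, while a CPU-Z window
# opened afterwards would only show the 5000 MHz non-AVX clock.
run = [ClockSample(0.0, 5000.0), ClockSample(1.0, 4800.0), ClockSample(2.0, 5000.0)]
print(summarize_run(run))
```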

But there are also things it does offer that are not implemented in BenchMate. Three things, actually:

- Bus frequency and ratio (can be added easily to BenchMate)

- A second level of CPU name detection by checking the CPUID family, model and stepping ID (can be done as well in BenchMate, but will take more time)

- The memory's Command Rate (currently not part of the HWiNFO SDK, but I'm sure it can be enabled)
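As a rough sketch of the second-level CPU check, family, model and stepping can be decoded from the EAX value returned by CPUID leaf 1, using the display-family/display-model rules documented by Intel and AMD. Executing CPUID itself needs native code and is not shown; the input values are just example signatures.

```python
def decode_cpuid_signature(eax: int) -> tuple[int, int, int]:
    """Decode CPUID leaf 1 EAX into (display family, display model, stepping)."""
    stepping = eax & 0xF
    base_model = (eax >> 4) & 0xF
    base_family = (eax >> 8) & 0xF
    ext_model = (eax >> 16) & 0xF
    ext_family = (eax >> 20) & 0xFF

    # The extended family is only added when the base family is 0xF.
    family = base_family + ext_family if base_family == 0xF else base_family
    # The extended model is only used for base families 0x6 and 0xF.
    model = (ext_model << 4) + base_model if base_family in (0x6, 0xF) else base_model
    return family, model, stepping


print([hex(v) for v in decode_cpuid_signature(0x906EC)])   # an Intel family 6 signature
print([hex(v) for v in decode_cpuid_signature(0x800F82)])  # an AMD family 17h signature
```

Comparing the decoded triple against a table of known CPUs would give the independent cross-check of the CPU name.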

These are the things that need to be discussed here to make our lives better. Shrinking the BenchMate window to a stoplight is definitely not what I had in mind for creating a new standard of benchmark validation, and that's why I'm not going to do that, especially not for the wrong reasons.

-

I have to check on GB 4 and its licensing; it seems there are some differences I did not anticipate.

Cinebench uses timeGetTime(), which is based on RTC. By enabling HPET in the OS (useplatformclock), you only activate it for the timer facility called QPC (QueryPerformanceCounter). All other timing functions stay exactly the same, so there is no HPET for Cinebench. The benchmark itself has to take care of timer reliability, and Cinebench doesn't care at all.

The reason Cinebench might be faster is that BenchMate injects the faster and more precise TSC timer into it. The score should also be more stable; RTC is horrible for measuring time periods.
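That every timing API is backed by its own OS facility is easy to see from Python, which reports the implementation behind each of its clocks. On Windows, CPython backs `time.perf_counter()` with QueryPerformanceCounter; other systems report different facilities. This only illustrates the idea and says nothing about how Cinebench or BenchMate read time.

```python
import time


def describe_clocks(names: tuple[str, ...] = ("perf_counter", "monotonic", "time")) -> dict[str, tuple[str, float]]:
    """Map each Python clock name to (backing OS implementation, advertised resolution in seconds)."""
    info = {}
    for name in names:
        clock = time.get_clock_info(name)
        info[name] = (clock.implementation, clock.resolution)
    return info


if __name__ == "__main__":
    for name, (implementation, resolution) in describe_clocks().items():
        print(f"{name:>12}: {implementation} (resolution {resolution:.1e} s)")
```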

-

Old score in Debug Mode while testing. Forgot to delete it, thanks for the reminder.

-


For this competition Leeghoofd stated that all current rules of HWBOT apply.

In the long run, my opinion is that this rule doesn't bring anything to the table. The Windows version and result time are shown in the result window. Trying to gain insight into open programs/running processes from the taskbar for moderation is dangerous, because programs can be hidden from the taskbar, the taskbar could be part of the wallpaper, or the process might not be shown on it in the first place. So decisions should not be based on it, imho.

If necessary, we could add a list of all running processes to the moderation metadata of the result. It would be a lot of information, but the snapshot could reveal tools running that should not be there. To help with moderation, we could distinguish between known and unknown processes and format them as separate lists.
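A minimal Python sketch of the known/unknown split. The allow-list contents are hypothetical, and collecting the process snapshot from the OS is left out.

```python
# HYPOTHETICAL allow-list; a real one would have to be curated for moderation.
KNOWN_PROCESSES = frozenset({"explorer.exe", "svchost.exe", "csrss.exe", "hwinfo64.exe"})


def split_processes(running: list[str], known: frozenset[str] = KNOWN_PROCESSES) -> tuple[list[str], list[str]]:
    """Split a process snapshot into known and unknown names (case-insensitive, sorted, de-duplicated)."""
    names = sorted({name.lower() for name in running})
    known_list = [n for n in names if n in known]
    unknown_list = [n for n in names if n not in known]
    return known_list, unknown_list


known, unknown = split_processes(["Explorer.exe", "svchost.exe", "svchost.exe", "unknown_tool.exe"])
print("known:", known)
print("unknown:", unknown)
```

Moderators would then only need to look closely at the short "unknown" list.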

-

1 minute ago, _mat_ said:

BenchMate 0.8.1

Small Bundle: https://bit.ly/2Y2hDW2 (15 MB, GPUPI 3.2 + 3.3, SuperPi, CPU-Z, GPU-Z)

Big Bundle: https://bit.ly/2Gf6uHd (385 MB, + Geekbench 3+4, all CBs)

Note: These are now self-extracting executables, so they should work everywhere!

Changelog

- Activated another protection against DLL injection attacks

- Improved font sizes on result dialog, changed font to Calibri

- Bugfix for 2920X/2950X and invalid processor groups

- Bugfix for error dialog, parent window wasn't responding

- Unified DPI scaling for error dialog

- Fixed a bug with Geekbench 3.4.2

- Performance improvement for file hashing of benchmark files

- Smaller SuperPi result message box reminder

Special thanks to @keeph8n for letting me into his troubled Threadripper sys!

Please update to the new version for testing!

A note on updating BenchMate in general, until an integrated updater is available:

- You can move your result directory and your config.json into the new installation at any time. They will be recognized on the next launch of BenchMate.

- Please close all benchmarks that were guarded by an old BenchMate version. If you don't want to bother, just reboot!
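For anyone who wants to script the update, here is a minimal Python sketch. The result directory name `results` is a placeholder assumption (use whatever your installation actually calls it); only `config.json` is named in the post. It copies rather than moves, so the old installation stays usable until the new one has been checked.

```python
import shutil
from pathlib import Path


def migrate_user_data(old_root: Path, new_root: Path, result_dir: str = "results") -> list[str]:
    """Copy config.json and the result directory (name is an assumption) into a new installation."""
    copied = []
    config = old_root / "config.json"
    if config.is_file():
        shutil.copy2(config, new_root / "config.json")
        copied.append("config.json")
    results = old_root / result_dir
    if results.is_dir():
        # dirs_exist_ok merges into an existing folder (Python 3.8+).
        shutil.copytree(results, new_root / result_dir, dirs_exist_ok=True)
        copied.append(result_dir)
    return copied
```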

-


BenchMate 0.8.1

Small Bundle: https://bit.ly/2Y2hDW2 (15 MB, GPUPI 3.2 + 3.3, SuperPi, CPU-Z, GPU-Z)

Big Bundle: https://bit.ly/2Gf6uHd (385 MB, + Geekbench 3+4, all CBs)

Note: These are now self-extracting executables, so they should work everywhere!

Changelog

- Activated another protection against DLL injection attacks

- Improved font sizes on result dialog, changed font to Calibri

- Bugfix for 2920X/2950X and invalid processor groups

- Bugfix for error dialog, parent window wasn't responding

- Unified DPI scaling for error dialog

- Fixed a bug with Geekbench 3.4.2

- Performance improvement for file hashing of benchmark files

- Smaller SuperPi result message box reminder

Upgrading

- You can move your result directory and your config.json into the new installation at any time. They will be recognized on the next launch of BenchMate.

- Please close all benchmarks that were guarded by an old BenchMate version. If you don't want to bother, just reboot!

Known bugs

- Result capturing fails with Geekbench 3.1.5 (use latest version of Geekbench for now)

- SuperPi might not start on some Windows 7 versions ("Failed to guard application")

Special thanks to @keeph8n for letting me into his troubled Threadripper sys!

-


Btw, two small but nice features in BenchMate 0.8:

I changed SuperPi's message box at the end of every run so newbies don't ruin their score. And you can move the SuperPi window around even with the message box active. Always hated that I couldn't do that!

For Cinebench I've removed the nasty dialogs when quitting the bench. I don't know how many times I've had to click those. No, I don't want to save my score, man!

It's also the little things like these that we can fix now in legacy benches. If you have another fix like this in mind, just share it and I'll look into it.

-


Not true at all. The EVGA SR-2 skews RTC on Windows 7 as well. I fixed this in GPUPI by detecting the mainboard and making HPET mandatory. God knows how many skewed SR-2 scores are on the bot because of that bug, since many other benches use RTC as well.

It's an assumption, based on a few tests, that RTC is safe on Windows 7. We don't know that for sure. In my tests I've found that RTC is very unstable and often skews more than 5 milliseconds per second. That's why BenchMate sometimes injects a TSC timer into a benchmark when HPET is not possible for performance reasons. It then tests the skew on every run that involves a TSC timestamp and adds the information to the result dialog in the screenshot.
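A minimal Python sketch of how a skew figure in milliseconds per second can be derived: time the same interval with a reference clock and with the clock under test, then compare. The 5 ms/s limit is a hypothetical threshold borrowed from the number in the post; BenchMate's actual limit and method are not given here.

```python
SKEW_LIMIT_MS_PER_S = 5.0  # HYPOTHETICAL threshold, not BenchMate's real value


def skew_ms_per_second(ref_start: float, ref_end: float, test_start: float, test_end: float) -> float:
    """Drift of a test clock against a reference clock, in ms per reference second.

    All timestamps are in seconds; a positive result means the test clock runs fast.
    """
    ref_elapsed = ref_end - ref_start
    if ref_elapsed <= 0:
        raise ValueError("reference interval must be positive")
    test_elapsed = test_end - test_start
    return (test_elapsed - ref_elapsed) / ref_elapsed * 1000.0


def is_skewed(skew: float, limit: float = SKEW_LIMIT_MS_PER_S) -> bool:
    """Flag a run whose timer drift exceeds the limit in either direction."""
    return abs(skew) > limit


# 10 s on the reference clock, 10.06 s on the test clock: 6 ms/s too fast.
skew = skew_ms_per_second(0.0, 10.0, 0.0, 10.06)
print(f"{skew:.2f} ms/s, skewed: {is_skewed(skew)}")
```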

It's not necessary to assume anything with BenchMate. We are hardware guys, let's measure and analyze.

Also I've stated many other reasons for using it in the posts above. It's not only about timer reliability!

Have you tested it? Isn't it great to upload a result in a matter of seconds not having to bother about screenshots or entering numbers manually into a form?

-


Very true, old hardware should be left out of this.

BenchMate has its limits as well. The common denominator is not only Windows 7; HWiNFO needs to run as well. I think the next weeks will show very clearly where to draw the line. I just don't think it's AMD + Win 10, because BenchMate is so much more than a timer skew check.

-


The problem is that no code is necessary to break every legacy benchmark on here. A download and three clicks and you have an undetectable edge over everybody else here.

I am not living in a fairy tale where I think benchmarks or BenchMate can be unbreakable. That's especially true because benchmarking needs security with next to no impact on performance; otherwise I would have implemented a hypervisor sandbox. Future versions of Windows will have an Isolated Mode for applications that will help to offload certain tasks into a secure container. But that can never be used for the benchmark itself, so we are kind of on our own here.

The thing is that we don't need a perfectly secure wrapper or benchmarks, even if that were possible. We just need to raise the bar high enough that real, individual effort is needed to cheat.

That aside, unifying the submission upload has many other benefits, as I stated before. We would have a weapon against the uncertain future of hardware and software, as you said yourself.

Regarding benchmark integration: Yeah, it's still early days for BenchMate, and many other benchmarks can be integrated over the next few months. Think of it like this: we no longer need any HWBOT integration to add a bench. Once it's BenchMate-compatible, it works for the bot. So soon you will have more benchmarks to choose from than you ever had!

Also, many more categories are possible for competitions. This is the state today:

- CB R20, CB 15 + Single Core, CB 11.5 + Single Core

- 3DMark03, 3DMark05, 3DMark06

- Geekbench3 Single + Multi Core

- Geekbench4 Single + Multi Core + Compute

- SuperPi 1M + 32M (all sizes would be possible)

- GPUPI 3.3 1B + 32B GPU, 100M + 1B CPU

- GPUPI 3.2/2.3 (yes, GPUPI 2.3 can be used as well now!)

I'm counting 23 possible categories of well-known benchmarks. Even more if we added GPUPI 500M or SuperPi 2M, for example.
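A quick tally of the list checks the count. It assumes the legacy GPUPI 3.2/2.3 line covers the same four categories as GPUPI 3.3 (1B and 32B on GPU, 100M and 1B on CPU); the post does not spell that out, but it is what makes the total come to 23.

```python
# Categories per entry in the list. The GPUPI 3.2/2.3 value of 4 is an
# ASSUMPTION: the same 1B/32B GPU and 100M/1B CPU split as GPUPI 3.3.
categories = {
    "CB R20, CB 15 + SC, CB 11.5 + SC": 1 + 2 + 2,
    "3DMark03, 3DMark05, 3DMark06": 3,
    "Geekbench3 SC + MC": 2,
    "Geekbench4 SC + MC + Compute": 3,
    "SuperPi 1M + 32M": 2,
    "GPUPI 3.3 1B + 32B GPU, 100M + 1B CPU": 4,
    "GPUPI 3.2/2.3": 4,
}
total = sum(categories.values())
print(total)  # 23
```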

That's just for now!

Regarding Windows 7: As Win 10 was the primary dev OS, it was clear that other OSes would need some work. We will get there, I promise. I'm not going to half-ass this. Windows 7 will work soon and all bugs will be fixed. It just takes time and effort.

As to your question: Sure, it would be possible to integrate only some of the features, but for now I see no reason to do that for Windows 7 to 10 on any bench. BenchMate could of course be only a light wrapper for XP. We can discuss this at a later point. The main reason I would not like to go down this route is to focus on the future and not on the past. If I got my hands on the SuperPi code, for example, I might be able to make a SuperPi 2 that works better on modern OSes and might help us out of the XP corner. That would take SuperPi to the next level and help it regain meaning in today's world. A step in the right direction!

Thanks for the kind words, Leeg. Very much appreciated!

-

XP is sadly not possible due to missing OS/kernel functionality. See my post here: https://community.hwbot.org/topic/190025-the-official-benchmate-support-thread/page/2/?tab=comments#comment-534605

Vista could work with some additional creative problem solving, but nobody seems to use it nowadays so the effort might be spent better elsewhere.

-

Missing XP support is not a matter of not wanting to do it; it's sadly impossible to get even halfway to where BenchMate is now security-wise. XP lacks vital functionality, like some very important kernel driver features that I need to secure benchmarks as well as BenchMate itself. Most of that functionality is Vista+, which was a big step in the right direction for Windows.

Windows 7 support alone was hard enough to achieve and took a lot of time. Sometimes I had to find very creative ways or code features myself so it's on par with the Windows 10 version.

Fun fact: If BenchMate only supported Windows 10 and onwards, you could start benchmarks from your desktop and they would be safe. That's not possible in any prior version of Windows though, so I had to settle for integrated launches.

A big problem I see with BenchMate integration into HWBOT is that HWBOT is not ready yet to go all the way. There are some important functions missing to bring the full concept to life. For example:

- Competitions can't be integrated into BenchMate (or anything else, for that matter). According to @richba5tard on a GitHub ticket, the query API was written by a temp and never finished. In its current state it only returns how many competitions are found, but no results. Same for users, benchmarks, you name it.

- There needs to be a full server-side hash check of every important benchmark file. It's an important part of validation. For now I have settled for showing the hashes of some of the files, which have to be compared manually. Something I really would like to get rid of.

- Important information from the result dialog is missing on HWBOT: Windows version, benchmark version, BenchMate driver and client version info, timer information, BIOS versions, enabled CPU cores and threads and so on. And I have many more things in mind here; I'm talking graphs and other analysis tools that would help to understand scores better.

- The submission upload has many problems and is also missing important functionality. Authentication is done with plain-text passwords, Facebook/Google authentication doesn't seem to be supported (so whoever made an account with these can't upload scores), auth tokens have to be inserted into the encrypted data file, submission comments are missing, multiple screenshot upload would be necessary, ...

- BenchMate needs some version control feature so you can't upload with old clients if a bug is found.
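A sketch of what such a server-side minimum-version check could look like, in Python. The function names and the dot-separated version format are assumptions; nothing here reflects an existing HWBOT or BenchMate API. Versions are compared numerically, part by part, because a plain string comparison would rank "0.10.0" below "0.9".

```python
from itertools import zip_longest


def parse_version(text: str) -> tuple[int, ...]:
    """Turn '0.8.1' into (0, 8, 1) so versions compare numerically, not as strings."""
    return tuple(int(part) for part in text.split("."))


def upload_allowed(client_version: str, minimum_version: str) -> bool:
    """Reject uploads from clients older than the minimum version the server accepts."""
    # Missing trailing parts count as 0, so "0.8" equals "0.8.0".
    pairs = zip_longest(parse_version(client_version), parse_version(minimum_version), fillvalue=0)
    for client_part, minimum_part in pairs:
        if client_part != minimum_part:
            return client_part > minimum_part
    return True


print(upload_allowed("0.8", "0.8.1"))  # an old client with a known bug is turned away
```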

That's just off the top of my head. So yeah, it's a good question whether HWBOT can, and is willing to, go forward and be ready for the future. Believe it or not, I actually don't want to develop another platform if it can be helped. But I also don't want to settle for things here just because "they always were that way and that's fine". I want to see competitive overclocking on the same level of credibility as Formula 1, skiing or pro gaming, and I don't see that happening with things as they are here.

PS: I really don't want to talk badly about the moderators here. They are doing a fine job with the tools at hand. I just think that the right tools are not there yet. My very ambitious goal would be to have 99% of the results not needing any moderation, so the rest can be thoroughly examined manually with enough data to make the best decision.

-

To spoil it for everybody here in the community: Nearly every benchmark on HWBOT is easily cheatable by just downloading a single, publicly available tool. Apart from that, there are a lot of other attack vectors (some very easy to find and abuse, some harder) that threaten the already non-existent credibility of screenshot benchmark results. I'd also like to clarify that GPUPI is not secure in any way in its current state. In retrospect it's a pile of shit, although sadly miles ahead of other benchmarks like wPrime or SuperPi.

Leaving aside the fact that benchmark security is in a horrible state, the same can be said for timer reliability. Yeah, there is an RTC and TSC bug on Windows 8+ for any CPU that is not Intel Skylake+. This bug definitely has an impact on some benchmarks, while others might have avoided it if their code were bug-free and future-proof. I assure you, from my experience supporting GPUPI for the last five years, that neither is true. It's also a fact that even well-versed overclockers have a hard time finding the right OS to produce valid results. And I completely get it; it's a nightmare.

Last but not least, let's keep in mind that all of the above just describes our current situation. What about the future? When will the next timer produce bugged results? Do we really want to wait for the next undetectable cheat, incompatibility or bug to ruin a beloved benchmark? It's going to happen sooner or later; the question is whether we want to do something about it.

So to get to the point: No, I don't think that the way HWBOT validates results works at all.

Running easily cheatable benchmarks with possibly unreliable timers does not work.

Uploading an easily editable screenshot by hand that shows CPU-Z and GPU-Z windows that were opened after the actual run does not work.

Filling submission data into a form by hand does not work.

I agree with you that it's too early for BenchMate to be used right now. It needs time and room for improvement. But I completely disagree that it should only be used for AMD, for all the reasons stated above.

About wPrime, I think it's a bad idea to let such an important decision as the integration of BenchMate depend on an old benchmark that's neither maintained nor bug-free, nor actually very special at all.

-

Another thought on the topic as a whole: I think it's important that we keep an open mind here and try to find the best way to verify and moderate benchmark results. Let's not force the new way to adapt to the old way!

The need to see the whole benchmark window is a very good example. This is a requirement that could be easily removed by automation now. BenchMate could make a second "screenshot" of the benchmark window alone (without any overlapped windows of course) and upload it together with the full screenshot. So two screens would be visible on the result page by default and show exactly what's necessary.

BUT this would require a small update of HWBOT to allow uploading of multiple screens with one result file.

@richba5tard Do you think that's possible?

-


The result dialog is as big as it needs to be, it adjusts its size to the content. "CINEBENCH R11.5" is just huge as a heading.

I can make the font smaller, of course. But we have to keep in mind that the goal is to see what is going on as fast as possible.

Edit: @Leeghoofd I see it now, the font seems to be bigger on Windows 8! Ok, I will have a look at it!

-

I have reuploaded the new version with more compatible zip archives. Sadly we are back to 550 MB for the big bundle.

48 minutes ago, keeph8n said:

I used 7zip as suggested and it worked flawlessly. I still have the same issue with this version as I had with the 0.7.1 release.

Well, please use the bug report tool as suggested above and we are going to have a good look at that.

-

On the same file, GPU-Z.exe? Maybe Windows Defender or another Antivirus tool hating the new GPU-Z version 2.22?

-

Looks more like a disk error. Try 7-Zip pls.

-

You might have caught a reupload. Had to fix something. Please redownload and check again.

I've also used LZMA as the compression algorithm this time, which saved 120 MB on the big bundle. I tested it with Win 10's integrated zip extractor and it worked. An old tool might stumble, though.
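The trade-off is easy to reproduce with Python's `zipfile` module, which can write entries with either Deflate or LZMA compression. LZMA is zip compression method 14, which older extractors may not support, while Deflate (method 8) is understood almost everywhere. The payload below is synthetic stand-in data, not the actual bundle.

```python
import io
import zipfile


def zipped_size(payload: bytes, method: int) -> int:
    """Size in bytes of an in-memory zip holding one file compressed with `method`."""
    buffer = io.BytesIO()
    with zipfile.ZipFile(buffer, "w", compression=method) as archive:
        archive.writestr("payload.bin", payload)
    return len(buffer.getvalue())


payload = b"BenchMate " * 100_000  # repetitive stand-in data, 1,000,000 bytes
print("deflate:", zipped_size(payload, zipfile.ZIP_DEFLATED))
print("lzma:   ", zipped_size(payload, zipfile.ZIP_LZMA))
```

How much LZMA saves depends heavily on the content, so the real bundle is the only meaningful test.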

-

@Leeghoofd I've added all categories as beta benchmarks now. Please adjust the competition, so everyone can directly submit to HWBOT.

Good news, everybody! BenchMate 0.8 is finally out!

Small Bundle: https://www.overclockers.at/downloads/projects/BenchMate 0.8.zip (23 MB)

Big Bundle: https://www.overclockers.at/downloads/projects/BenchMate 0.8-bundle.zip (436 MB)

Few quick notes:

- Every CPU benchmark category that's on HWBOT can now be submitted online into a beta benchmark category named "BENCHMARK XY with BenchMate".

- 3DMark03, 3DMark05 and 3DMark06 are supported but have to be installed by yourself and added with the [ + ] button, or just started while BenchMate is running. The benchmark will be added to the list automatically when it's supported. That works for all benchmarks, btw.

- There is now a Bug Report tool. Please use it!

If BenchMate fails to start, you can open "BugReport.exe" manually for submission.

Have fun!

I am going to start an announcement thread next, where I'll go into detail about what BenchMate does. But feel free to test away!

-

3 hours ago, flanker said:

Mat, is version 0.8 ready for the public now? Thanks

Last breaths before it is. Please be patient!

-


3 hours ago, cbjaust said:

Re: Skew, I found that CINEBENCH R15 would get a skew error warning quite a bit.

That's exactly why I rewrote a huge chunk of code. CINEBENCH uses timeGetTime(), which internally uses RTC. That's a very bad choice, especially nowadays when there are other, much more precise options. So what happens here is that timeGetTime() is sometimes off by up to 5 ms PER SECOND, and that triggers the skew detection for this timer.

What I normally do for all well-written benchmarks is inject my hardware implementation of the HPET timer (note: it does not have to be enabled in Windows, only in the BIOS!) and completely emulate these timer calls. But that's sadly not possible with CINEBENCH, because it calls this timer facility about a million times in 20 seconds. The driver emulation would result in a performance impact (small, but it's there), because the HPET timestamp has to be queried via a driver IOCTL, while timeGetTime() makes use of an OS syscall. Nothing is faster than that, and RTC is faster than HPET as well.
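Some back-of-the-envelope numbers show why the per-call cost matters. The call count, run length and 5 ms/s RTC error are the figures from the post; the 1 µs extra cost per driver round-trip is a purely hypothetical value chosen for illustration, not a measurement.

```python
# Figures quoted in the post.
calls = 1_000_000        # timer calls by CINEBENCH per run
run_seconds = 20.0       # approximate run length
rtc_skew_ms_per_s = 5.0  # worst-case RTC imprecision

# HYPOTHETICAL: extra cost of one HPET read through a driver IOCTL, in microseconds.
overhead_us = 1.0

calls_per_second = calls / run_seconds                 # timer calls every second
added_seconds = calls * overhead_us / 1_000_000        # extra time spent only on timer reads
slowdown_percent = added_seconds * 100 / run_seconds   # relative impact on the run
rtc_error_ms = rtc_skew_ms_per_s * run_seconds         # possible RTC error over one run

print(f"{calls_per_second:,.0f} calls/s, +{added_seconds:.1f} s ({slowdown_percent:.1f} %), "
      f"RTC error up to {rtc_error_ms:.0f} ms")
```

With 50,000 calls per second, every extra microsecond per call would cost about 5 % on a 20-second run, while a 5 ms/s RTC error can move the result by up to 100 ms.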

To sum up: Don't call ANY timer facility hundreds of thousands of times. There is no reason for that if the calculation code is independent of the GUI. Also, NEVER use RTC. You will produce a slot machine where several runs of the same benchmark end up with different results. It's horrible to tweak the last few milliseconds out of a bench like that.
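The advice translates into a simple pattern: read the clock once before and once after the calculation, and keep timer calls out of the hot loop. A minimal Python sketch, where `work()` is a hypothetical stand-in for a benchmark kernel; a GUI could poll progress separately without touching the timer.

```python
import time


def work(n: int) -> int:
    """Hypothetical benchmark kernel: sum of squares, with no timer calls inside."""
    total = 0
    for i in range(n):
        total += i * i
    return total


def timed_run(n: int) -> tuple[int, float]:
    """Read the clock exactly twice: once before and once after the kernel."""
    start = time.perf_counter()   # QueryPerformanceCounter on Windows
    result = work(n)
    elapsed = time.perf_counter() - start
    return result, elapsed


result, elapsed = timed_run(1_000_000)
print(f"result={result}, elapsed={elapsed:.3f} s")
```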

Btw, even in CB R20 the timer code was not updated. It's kind of ridiculous that I have to fix the mistakes of other benchmark developers. :p

So what I do now in BenchMate 0.8 is inject QPC into CINEBENCH (which should be the CPU's Timestamp Counter, TSC) and detect skewing on those timestamps. Much more reliable, and no false positives so far!

-


40 minutes ago, marco.is.not.80 said:

Soon we will start hearing questions like "Do you have the working version of Windows 10 or the older version which stopped working?" ?

I don't really get it. All Windows 10 versions will work as soon as the driver is signed by Microsoft. It's just another bullshit "security" restriction that they can't really enforce, because ... it's bullshit.

-


_mat_ - Core i9 9900K @ 4703.4MHz - 34590 points Geekbench4 - Single Core with BenchMate

in Result Discussions


Guess it's just another upload of the same result. The debug version can do that to make my life easier when testing.