Posts: 1003

Days Won: 41

Posts posted by _mat_

-

1 hour ago, yosarianilives said:

I'd be good with ditching all geek on the bot, but some people may not like their work going for naught. IMHO if we keep geek, we keep all geek, kinda like how we have every 3DMark.

What about cutting benchmarks only after the season ends, in general? That would hurt a little less, at least.

-

8 minutes ago, yosarianilives said:

Presumably it could be changed to allow submission the same way as the other geeks, or are we dropping geek altogether?

We could do that and have another benchmark that can't be used on Windows 10 on AMD and Pre-Skylake. I'd rather not reward the "legal action threatening, not caring about the community and oblivious to obvious timer problems" behaviour shown by Geekbench's developer. We should really focus on benchmarks made by developers that give a fuck about overclocking. We definitely won't run out of benchmarks any time soon.

-

@laine, thank you for reporting these problems.

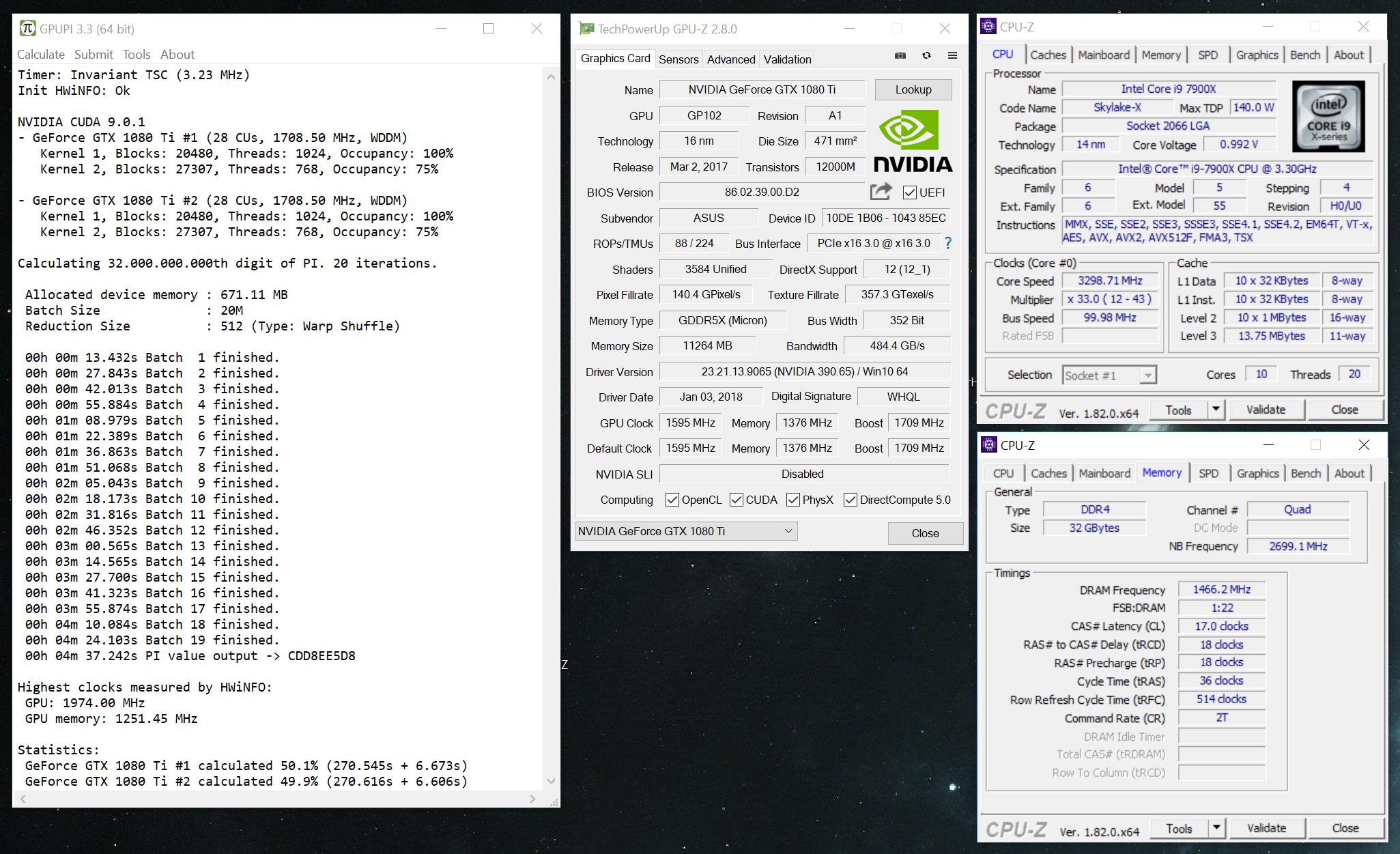

32M on Intel iGPU: Have you tried smaller batch sizes? More than 500 MB of system memory might be more than Intel's OpenCL implementation can handle. It might also be a driver problem; I will look into it. The Intel GPU OpenCL drivers are a bit nasty.

500M results: This is not caused by system instability, but by a bug in GPUPI. The validation seems to be messed up for some reason. Please use 100M or 1B; they should work fine.

-

Using the latest version of BenchMate is recommended, though. But that doesn't change the validity of the result, of course. :)

-

Thanks, I've fixed it and it shows up in the right category now.

I wouldn't bench it, though; I am going to remove the Geekbench 5 categories as soon as BenchMate 0.10 is released.

You can find more information about our great history with Geekbench here:

-

42 minutes ago, Leeghoofd said:

Don't block the rendered scene of CB R15... BenchMate will soon test the integrity of the rendered file, but for now it has to be as visible as possible. No need for GPU-Z, this is a 2D score, so you need to show the CPU-Z CPU and memory tabs!

That's already implemented in version 0.9.x. You can verify that none of the resources used by CINEBENCH have been changed by looking at the "Textures/Models" row in the result dialog. 196...073 is correct for R15. If a single bit were changed, the number would be different.

BenchMate 0.10 improves this feature by showing an invalid score when the Textures/Models file integrity hash doesn't match. That's not ideal, because I would rather check these values against the benchmark version that's used, but that would need to be implemented on the server side (HWBOT). That will take some time, as you know. Step by step.

@mikebik The score looks good now. Make sure that the result dialog is visible in the screenshot. That's the best we can do right now without direct submission to the appropriate categories. As Leeghoofd said, this is in the works with the devs of HWBOT.
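A per-version integrity check of the kind described above could look roughly like the following minimal Python sketch. The post doesn't say which hash BenchMate uses, so SHA-256 is an assumption, and the `EXPECTED_DIGESTS` table and all function names are hypothetical. The elided R15 value is deliberately not filled in.

```python
import hashlib

# Hypothetical table: benchmark version -> expected hex digest of its
# Textures/Models resource file. The real values (and the hash BenchMate
# actually uses) are not given in the post; they would come from a trusted
# source such as the server side.
EXPECTED_DIGESTS = {}


def digest_chunks(chunks):
    """SHA-256 hex digest over an iterable of byte chunks (assumed algorithm)."""
    h = hashlib.sha256()
    for chunk in chunks:
        h.update(chunk)
    return h.hexdigest()


def file_digest(path, chunk_size=1 << 20):
    """Hash a file in 1 MiB chunks so large resource files need not fit in memory."""
    with open(path, "rb") as f:
        return digest_chunks(iter(lambda: f.read(chunk_size), b""))


def is_untampered(actual_digest, version, expected=EXPECTED_DIGESTS):
    """Valid only if the benchmark version is known and the digests match exactly."""
    want = expected.get(version)
    return want is not None and actual_digest == want
```

Looking up the expected digest by benchmark version, instead of hard-coding a single value, is the part that would live on the server side. A single flipped bit in the file yields a completely different digest.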

-

Great to hear that it works now.

-

I guess that's because your Windows installation doesn't allow drivers that aren't signed by Microsoft. You can either enable test signing (bcdedit /set testsigning on + reboot) or wait for BenchMate 0.10, which has the driver signed appropriately.

But if you drop a quick bug report, I can check for sure and get back to you.

-

2 hours ago, Splave said:

BenchMate would say "guarded by BenchMate" in the title of the program, so it doesn't seem like you are running BenchMate at all?

You are right, these scores were not made with BenchMate. I can't even see it open in the taskbar.

What happened here? I would like to get your feedback to make BenchMate more user-friendly. It should be as intuitive as possible.

-

2 hours ago, Alex@ro said:

You don't know if the result was good or not, just delete it anyway.

Btw, I have this thought now: the user does not have to prove he is innocent, the prosecutor needs to prove that he is guilty.

This shouldn't have been a surprise at all. The rules clearly state that AMD can't be benched on Windows 8 and 10 because the system clock interrupt is directly bound to the reference clock. It's an architectural error that surfaced with Windows 8 and still exists. To make matters worse, a moderator can't prove anything based solely on a screenshot, especially not a skewed timer. While "innocent until proven guilty" is a great thing in our justice systems, it can't be applied here. There is simply not enough evidence to enforce it, unless Leeghoofd and his team can raid your bench place whenever a score is in doubt.

We all knew this day would come and that it would be painful. It sucks, but it's necessary to keep up the bot's credibility and actually keep it from dying. There are enough sites with shitty results online that don't care a bit about an even playing field. Try Geekbench, for example: they officially don't give a single fuck, and frankly users shouldn't give one about their scores there either. It's a prime example of how lots of data ends up meaning nothing. I do hope that the bot doesn't end up like that. An even playing field is what makes this fun; untrustworthy, unbeatable scores kill it.
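The consequence of a timer tied to the reference clock can be illustrated with a deliberately simplified Python model. It assumes that the system timer's tick rate scales linearly with the reference clock (BCLK), while Windows keeps converting ticks to seconds at the nominal rate. Real platforms differ in detail, and the function names are illustrative only.

```python
def reported_runtime(true_runtime_s, nominal_ref_mhz, actual_ref_mhz):
    """Simplified model: the timer ticks proportionally to the reference clock,
    but ticks are converted to seconds assuming the nominal frequency.
    Running with a lowered reference clock therefore makes the run look shorter."""
    return true_runtime_s * actual_ref_mhz / nominal_ref_mhz


def apparent_speedup(nominal_ref_mhz, actual_ref_mhz):
    """Factor by which a time-based score is inflated under this model."""
    return nominal_ref_mhz / actual_ref_mhz


# A 60 s run with the reference clock held at 95 instead of 100 MHz
print(reported_runtime(60.0, 100.0, 95.0))  # 57.0
```

This shift is small enough to look plausible on a screenshot, which is exactly why a moderator can't spot it from the screenshot alone.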

-

The rules are pretty clear on the topic: use Windows 7 or older, or your benchmark can't receive any points. BenchMate is the solution we are working on so that users like yourself can bench with Windows 10. It's an uphill battle, but we're getting there! BenchMate 0.10 will be released in the next few days and will feature new benchmarks, lots of bugfixes, some built-in tweaks and other options, as well as an auto-updater to painlessly introduce new updates and additional benchmarks in the future.

As for your reported posts: please use the "Report Submission" button in the top right of the score page, so that a moderator will have a look at them.

-

21 minutes ago, Matsglobetrotter said:

Thanks for the very good explanation and for taking on the challenge to actually make an even playing field without dubious clocks. Indeed, we can't assume shit. Then of course it does not help that some motherboard manufacturers create a pause button to freeze a run, so parameters can be changed midway through a benchmark run....

I doubt that they can freeze the HPET crystal, so I'm pretty sure that will result in an invalid run. It needs testing, though.

-

BenchMate uses HPET as well as the ACPI PM Timer to monitor the benchmark results. It's also good to know that there are two methods BenchMate uses to validate timer measurements:

- The HPET timer is injected into the benchmark, so every timer call returns a very precise HPET timestamp. This is used for SuperPI and GPUPI, for example. Results will also be more stable for the reasons you stated above. Every runtime is also compared with the other timers in the system. But this method only works if the timer is called as intended: a few dozen times during a reasonable runtime, not a million times per second. In the latter case HPET would be too slow and would hold the score back.

- If the benchmark calls its timer so often that a very fast timer is necessary to stay competitive, BenchMate monitors the original timer instead. The original timer's reliability is ensured by comparing runtimes with HPET and the ACPI PM timer. If the benchmark chooses a very bad timer (like the very jumpy timeGetTime()), it is exchanged for the more stable QPC timer (which relies on the CPU's TSC). This is used for CINEBENCH and 3DMark03, 05 and 06, for example.

Both methods have to be extremely precise to produce a validated score with BenchMate. Each timer has to agree with the other measured time sources to within 99.99% and to within 10 milliseconds, regardless of how long the measured run is, so all runs are treated equally. As you can see, there's not a lot of room for error here, and none is needed. If something is wrong, it will clearly show up as invalid.

What's important here is that we need to stop assuming things when it comes to timer reliability. "AMD is safe on Windows 7" is not necessarily true at all, because most of the time we don't know the details of the system components involved and how the OS reacts to them. The truth is that Windows chooses the default system timer by its own rules, and these are not made for benchmarking. Microsoft cares about mainstream use and performance; everything else is better left to the user, who should have a clue what he wants to do with the system (benchmark it). So there is an option to switch the system timer to another timing method. Or as we know it: bcdedit /set useplatformclock yes

Does it say HPET in there? No, it says "platformclock", which might be HPET, might be the ACPI PM timer, might even be the RTC or PIT. It's whatever Windows deems to be the right time source provided by the platform (as in: not by the CPU, I guess).

If that's not enough to think about, here is a new fact: there are also platforms out there where HPET is emulated by another timer source that might be unreliable due to skewing. To sum this rant up in one sentence: we can't assume shit, we have to measure to be sure.
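The cross-check between time sources can be sketched in Python as follows. This is not BenchMate's code. The function names are hypothetical, and it assumes that both stated limits (99.99% agreement and 10 ms) must hold for every pair of timers measuring the same run.

```python
from itertools import combinations

# Thresholds as stated in the post. Requiring BOTH limits is an assumption.
MAX_RELATIVE_DEVIATION = 0.0001   # 99.99 % agreement
MAX_ABSOLUTE_DEVIATION_S = 0.010  # 10 milliseconds


def disagreeing_pairs(runtimes):
    """runtimes: mapping of timer name -> runtime in seconds, measured for the
    same benchmark run. Returns (timer_a, timer_b, deviation) for every pair
    of time sources that disagree too much."""
    bad = []
    for (name_a, a), (name_b, b) in combinations(sorted(runtimes.items()), 2):
        deviation = abs(a - b)
        relative_limit = MAX_RELATIVE_DEVIATION * max(a, b)
        if deviation > relative_limit or deviation > MAX_ABSOLUTE_DEVIATION_S:
            bad.append((name_a, name_b, deviation))
    return bad


def run_is_valid(runtimes):
    """A run counts as valid only if at least two time sources were measured
    and every pair of them agrees."""
    return len(runtimes) >= 2 and not disagreeing_pairs(runtimes)
```

Under this reading, the relative limit is the stricter one for runs shorter than 100 s, and the 10 ms cap takes over beyond that, so long runs get no extra slack.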

-

1 hour ago, ADVenturePO said:

I've wanted to upload them to my site for quite a while, so I've finally done that. You can find everything you need here:

https://www.overclockers.at/news/gpupi-international-support-thread

-

@ADVenturePO Your error states that you don't have any OpenCL installations available that support OpenCL 2.0. That's normally only the case if you have a very old setup (not your problem) or only an old OpenCL version available, like the one preinstalled with Windows.

So the solution to your problem is to install the appropriate CPU OpenCL driver from AMD. It is not installed with the graphics card driver; it is separate from the Adrenalin drivers, for example. As @StingerYar stated, you should use the latest version of the AMD APP SDK. You can try older AMD APP SDK versions as well; they might give better performance (APP SDK 2.9 provides OpenCL 1.2 support, which might be faster).

Btw, it's good to know that you can install as many OpenCL versions in parallel as you want. Each one of them should show up in GPUPI.

@StingerYar This sounds like a bug with the GPU drivers. They should install their OpenCL GPU version next to any other OpenCL versions, but it seems like they do not.

Check the following registry key: HKEY_LOCAL_MACHINE\SOFTWARE\Khronos\OpenCL\Vendors

It should list all available OpenCL DLLs on the system. It's possible that the GPU version has the same name as the CPU version. In that case, add the full path of the AMD APP SDK version to this list.

Last but not least, this is not a VC Redistributable problem! That would be something along the lines of "msvcr140.dll is missing". GPUPI 4 will come with the VC runtime compiled in. This is actually not preferred by Microsoft, but they don't seem to be capable of doing this right, so I don't have any other choice, as it makes my products suck more.

My latest works like BenchMate are already compiled with the VC runtime inside, hence their huge executable sizes.
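To inspect that key programmatically instead of in regedit, here is a minimal Python sketch using the standard `winreg` module. The function names are illustrative. It follows the Khronos ICD loader's documented convention: each value's name is the path of an OpenCL DLL, and a DWORD of 0 marks that entry as enabled.

```python
import ntpath

VENDORS_KEY = r"SOFTWARE\Khronos\OpenCL\Vendors"


def read_opencl_vendors():
    """Read the Khronos OpenCL Vendors key from HKEY_LOCAL_MACHINE.
    Windows only. Returns {dll_path: dword_value}."""
    import winreg  # standard library, but only available on Windows

    entries = {}
    with winreg.OpenKey(winreg.HKEY_LOCAL_MACHINE, VENDORS_KEY) as key:
        index = 0
        while True:
            try:
                name, data, _value_type = winreg.EnumValue(key, index)
            except OSError:  # raised when there are no more values
                break
            entries[name] = data
            index += 1
    return entries


def enabled_icds(entries):
    """The ICD loader only loads entries whose DWORD data is 0."""
    return [path for path, data in entries.items() if data == 0]


def missing_icds(entries, expected_paths):
    """Expected DLL paths that are not registered yet.
    The comparison ignores case and slash direction, like Windows paths do."""
    registered = {ntpath.normcase(path) for path in entries}
    return [p for p in expected_paths if ntpath.normcase(p) not in registered]
```

On Windows, passing `read_opencl_vendors()` and the full path of the APP SDK's OpenCL DLL to `missing_icds` shows whether that path still needs to be added. On 64-bit Windows, 32-bit ICDs are registered in the WOW6432Node view of the key, which this sketch doesn't read.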

-

1

1

-

-

11 minutes ago, Digg_de said:

The best is no GPUPI stage! Worst benchmark here on HWBOT... sry.

Without any arguments, your feedback is useless. Not sorry.

You are welcome to add some constructive feedback, though; it might change things for the better in the future.

-

As the new 3DMarks are separated into workloads, where each test and sometimes each subtest has its own executable, it's necessary to check the LOD Bias setting via NVAPI before (or during) each run inside the workload executable. You can't change it in between, as far as I'm aware. As soon as the executable is loaded, the NVIDIA driver settings seem to be locked in.

There might be some way with releasing and reinitializing the necessary Direct3D interfaces, but that would be a lot of work. And as soon as you can do something like that, it's a lot easier to just piggyback the settings of the workload, a plain JSON passed to the workload executable as a "locked" file. That was the only way I've found to fuck with LOD in the new 3DMarks.

To say something on-topic as well: it has to be considered that points in Port Royal are currently rather expensive. I heard that @Splave sold a testicle to compete with the YouTubers. They now call him the Lance Armstrong of overclocking, because he's still going strong with one nut. Might be an urban legend, though.

-

4 hours ago, Splave said:

Had 3 instances open, 2 with 32B and 1 with 1B, so I could start 32B as soon as 1B was done. Could manage about -75 loaded this way.

ColdbugMate for GPUs incoming?

-

Great stuff!

I only had part of a late evening again, but it didn't take long to remove XTU's obfuscation and get about 90% of the code readable and ready for debugging. I also removed the anti-tampering check by switching a single IL instruction. What does that mean exactly? Well, I basically have my own custom XTU version and can make my own scores.

That said, the new version is better when it comes to anti-tampering. Just not by a lot. The problem here is C#. Like Java, it's the wrong tool for the job.

@0.0 I guess your wrapper is for the XTU1 score only, right? The XTU2 benchmark option is implemented by calling native code from XtuBenchmark.dll and registering a callback to fetch the score. I didn't dig into it in depth, but this is a lot harder than wrapping p95bench.exe.

-

8 minutes ago, unityofsaints said:

There's a little operating system called Windows 7 that we've been using for Geekbench 3 since its release in 2013...

No need to be rude here. This is a discussion to get the most fun out of the comp, and I'm trying to give my two cents. And you might have missed the point of my post, although you quoted it:

3 hours ago, _mat_ said:

Geekbench is faster on Windows 10 (since 1903), if I recall correctly.

I don't know how much fun it is to not be able to beat the Win 10 scores of others.

-

Is Geekbench 3 on AMD really a good idea? BenchMate is not going to support it in its next version, and the old version won't be available for download much longer either. And Geekbench is faster on Windows 10 (since 1903), if I recall correctly.

-

10 hours ago, l0ud_sil3nc3 said:

Same thing popping up over here, even on the X570 Aqua, which has no clockgen or any way to alter BCLK.

Interesting. I will have some time tomorrow to finish BenchMate 0.10. And even if I can't finish it tomorrow, I can send you and the Splaver a test version to see if this is fixed now. That would help me a lot!

-

1 hour ago, Leeghoofd said:

Maybe we can give it a spin in the PRO OC, so the big boys can mess with it and see if they can break or trick it... is that an option?

In my opinion, both are needed: theoretical research to check what the benchmark does (or doesn't do), and a small competition to see whether it scales well, whether there are oddities under extreme conditions, and whether it's fun to bench.

-

I only had a quick look, because I'm short on time currently. But I can already say that there are security fixes in there, so it's actually better.

The bench itself is better as well; it fully loads a 9900K during the whole test.

Regarding timers, things don't look very bright. I'm pretty sure that there is no mitigation for Pre-Skylake in there, so timers will skew on older systems.

I will have an in-depth look soon.

So I guess the goal is to give XTU points again if it does alright?

GPUPI - SuperPI on the GPU (in Benchmark software)

Just as I expected. The bigger reductions use lots of local shared memory that smaller GPUs normally can't handle. The kernel should actually fail, but it seems like the Intel driver just returns nonsense. Maybe I can check beforehand whether there is enough local memory available, to avoid the confusing error that GPUPI gives. That error should be reserved for stability issues.
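A pre-check along these lines could be sketched in Python as follows. In OpenCL, the device limit would come from clGetDeviceInfo with CL_DEVICE_LOCAL_MEM_SIZE, and a kernel's own static usage from clGetKernelWorkGroupInfo with CL_KERNEL_LOCAL_MEM_SIZE. The memory layout assumed here (one element of `bytes_per_item` per work-item) and the function names are hypothetical, not GPUPI's actual design.

```python
def fits_local_memory(work_group_size, bytes_per_item, device_local_mem,
                      kernel_static_local_mem=0):
    """True if one work-group's reduction buffer fits into local memory.
    Assumes one element of bytes_per_item per work-item (hypothetical layout)
    plus whatever local memory the kernel already uses statically."""
    needed = work_group_size * bytes_per_item + kernel_static_local_mem
    return needed <= device_local_mem


def largest_fitting_reduction(candidates, bytes_per_item, device_local_mem,
                              kernel_static_local_mem=0):
    """Pick the biggest reduction size that fits, or None if none does, so the
    user can get a clear message instead of a misleading stability error."""
    fitting = [size for size in candidates
               if fits_local_memory(size, bytes_per_item, device_local_mem,
                                    kernel_static_local_mem)]
    return max(fitting) if fitting else None
```

Checking before the kernel is enqueued means that a driver silently returning nonsense is never interpreted as an unstable overclock.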

Thanks for your feedback!

I won't touch the old ATI Stream stuff with a ten-foot pole. I worked with it many years ago, and it's really, really buggy and also very slow. Maybe it was just me, but I thought that GPUPI wouldn't be possible at that time and gave up. As for DirectX and OpenGL compute shaders, they would be a possible way to enable support for these two iGPUs. I've put it on my list, right below Vulkan compute support, which is something I've wanted to look into for some time now.