Everything posted by _mat_

-

Please have a little patience until version 0.8 is ready. It's very close; testing just revealed a minor problem that will take a few additional hours. I really want the first public release to be solid! Feel free to test with 0.7.1 though if you have the time.

@marco.is.not.80 Is this an OEM version of Windows 10 (like one preinstalled on a notebook)? If yes, the driver needs to be signed by Microsoft as well to work, and that's why you are seeing an error. I will of course do that for future versions once testing has been successful. Also check the system time; it needs to be correct, not set to something like the year 2088.

-

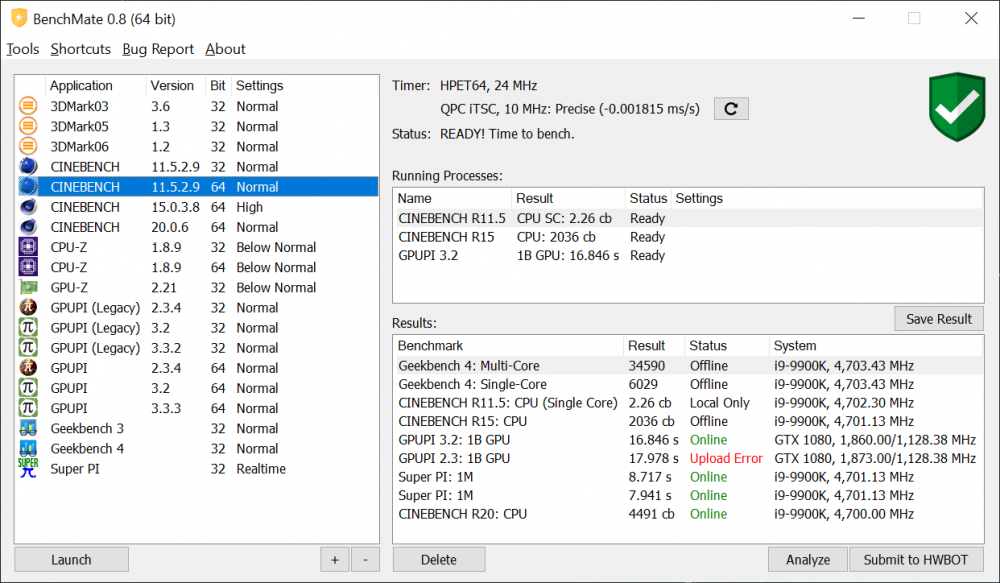

@Leeghoofd To make the testing experience better I suggest adding the benchmarks used for the stages as beta benchmark categories to HWBOT. I already did this for SuperPi 1M and Cinebench R20: https://hwbot.org/benchmark/superpi_-_1m_with_benchmate/ https://hwbot.org/benchmark/cinebench_-_r20_with_benchmate/ I will add the others as well, if that's alright with you. With version 0.8 you will then be able to click on "Submit to HWBOT" on the bottom right and the result is online. After the competition these results could be moved to their real categories, if the testing was successful of course.

@Lucky_n00b I will add a donation link to the next release! Please don't sell any of your stuff, better bench it with BenchMate!

-

Interesting screenshot for sure. Seems like BenchMate wasn't able to wait for the invalidated, old dialog position before the screen was captured. I have changed the result dialog's behaviour now to always move back to the bottom right position of the screen. The position of the result dialog is fixed because I think it's bad design to search the screenshot each time for the same information. It's even worse of course when comparing multiple screens, which we all do very often I'm sure. Just reposition all other items, the result will be the same. Benchmark to the top left, CPU-Z windows to the right. Long term I think it's reasonable to remove the need for CPU-Z and GPU-Z windows on the screen. It's very time consuming and it shouldn't bring any extra information to the table. If it still does we should integrate it into the result window. So there will be much more space available in the future.

-

I'm currently testing the new version on several platforms. I successfully tested it on TR4 on my bench OS (for reviews): https://hwbot.org/submission/4191705__mat__cinebench___r20_with_benchmate_ryzen_threadripper_2990wx_10984_pts Don't be shocked about the world record, I've added a beta benchmark category called "Cinebench R20 with BenchMate" to make direct uploading to HWBOT possible. Nonetheless we will find your problem. BenchMate 0.8 brings a fresh new Bug Report Tool, so bugs can be reported easily but also thoroughly with just a few clicks.

-

Ryzen Points Disabled Submissions Still Affect The Ranks

_mat_ replied to Splave's topic in Submission & member moderation

Reviews and first benchmark results show base clocks from 99.8 to 102.4 (screen below, from the Computerbase review - doubt they changed much in BIOS). So I guess it highly depends on board and BIOS, as always. It would also make sense to up it to get a little bit more boost for the infinity fabric, am I right? Otherwise it seems to be always 33 MHz steps. Well, we'll see.

@flanker, what would that prove? That the timer has not been skewed at some point, but nobody knows exactly when and if that was during the benchmark run? BenchMate 0.8 will come out tomorrow. We should find out what we need to make things right and add it. Tests will tell.

-

Lucky_n00b - Ryzen 9 3900X @ 5200MHz - 4076 cb Cinebench - R15

_mat_ replied to flanker's topic in Result Discussions

No, I am pretty sure it's not. I don't have a sample to test this, but I doubt that the situation has changed in any way. So only those that use HPET like GPUPI and X265 should be allowed. Latest 3DMarks Fire Strike+ should be safe as well. At least until it's proven that RTC/TSC is not skewed.

-

Lucky_n00b - Ryzen 9 3900X @ 5200MHz - 4076 cb Cinebench - R15

_mat_ replied to flanker's topic in Result Discussions

No, unstable and slow, Win 10 is the only option for Ryzen 3000.

-

First Ryzen 3000 score with BenchMate, awesome! CB R15 can't be uploaded yet, only GPUPI (I should put that in my signature, I have to write this too often currently). You shouldn't upload the hwbot file of course, it can be uploaded at a later point when BenchMate is integrated. The video can't prove that TSC/RTC is not skewing, so following the currently active HWBOT rules your scores are technically not allowed. But the CB screen above would have shown skewing, which hints that your scores are in fact okay. I know, the Win 10 situation sucks, but I hope it's not for much longer.
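To illustrate what a skew check could look like, here is a minimal sketch. It assumes that one clock can be trusted as a reference (for example an HPET-backed one) and compares the elapsed time it reports with the clock the benchmark relies on. This is not BenchMate's actual detection; the function names and the 0.5 % tolerance are made up for illustration.

```python
from typing import Callable

Clock = Callable[[], float]  # any callable returning seconds as a float


def skew_ratio(reference: Clock, suspect: Clock, work: Callable[[], None]) -> float:
    """Run `work` and return suspect_elapsed / reference_elapsed.

    A ratio close to 1.0 means both clocks agree on how long `work` took.
    """
    ref_start, sus_start = reference(), suspect()
    work()
    ref_end, sus_end = reference(), suspect()
    ref_elapsed = ref_end - ref_start
    if ref_elapsed <= 0:
        raise ValueError("reference clock did not advance")
    return (sus_end - sus_start) / ref_elapsed


def is_skewed(ratio: float, tolerance: float = 0.005) -> bool:
    """Flag a run when the two clocks disagree by more than `tolerance`.

    The default tolerance is an arbitrary illustrative value.
    """
    return abs(ratio - 1.0) > tolerance
```

The clocks are passed in as plain callables, so the check itself does not care where the time comes from. The important part is that the comparison spans the benchmark run itself, which is exactly what a video or a screenshot taken afterwards cannot show.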

-

The file you are getting an error from is just part of the normal Cinebench package. You can skip these files, they are just sample results shipping with the benchmark. You can only upload GPUPI results for now. HWBOT can't handle other benchmarks yet because they need to be integrated. Hopefully soon.

-

The compute test of Geekbench 4 is now fully supported with version 0.8, coming asap. With 0.7.x the software could not distinguish between CPU and Compute score. As Leeghoofd said, uploading to HWBOT will not be possible until BenchMate is officially integrated into HWBOT. It's no biggie, the benchmark category just needs some configuration on the bot side.

-

Only GPUPI can be uploaded as of now, HWBOT still needs to support the other categories. CB bugs will be fixed with 0.8. Only OpenGL will not be supported.

-

The truth is that benchmarks should have been properly analyzed for (at least obvious) attacks and timer reliability issues BEFORE points are given. Testing some systems and discussing the results is simply not enough. The outcome is a very painful decision to remove points of a benchmark too late in the game. That hurts the bot, its community and competitive benchmarking in general. So BenchMate is (among other things) a last-ditch effort to save at least some of the legacy benchmarks from the very same painful decision.

Can every benchmark be saved? Yes. With a lot of time and effort, you can fix even the worst pile of shit code. But currently BenchMate focuses on benchmarks that were either easy to fix or in my opinion worth the trouble (globals/popular/not a lot of bugs/works on modern hardware/ ...). If you think a legacy benchmark (not actively developed) is missing, please provide arguments.

About the costs, BenchMate needs about 1000 USD/year for licenses and certificates. It would be nice if that sum did not have to come out of my pocket in the long run.

-

The concept is to always have BenchMate's verification on the bottom right of the screen. The eye will rely on the fixed position and it's possible to verify the screen faster. So it's better to move around the CPU-Z screens instead. As for the CPU-Z windows, I know that there will still be a big decision to make here, but I don't think that opening a few CPU-Z screens is a solution at all. If it's really necessary to verify the scores by CPU-Z instead of HWiNFO, we should integrate the CPUID SDK into BenchMate. For now HWiNFO offers the best detection for CPU and GPU in combination for the smallest price. Otherwise we'll need two different SDKs (CPUID and HWiNFO/GPU-Z SDK) to do both and definitely a higher budget to make that happen. Don't forget that we are talking yearly licensing costs here. We should discuss this in full with the next release, 0.8. It will be the first public release and I will properly announce it here in the forums.

-

Pifast itself is very easy to rescue and I will support it in the long run. Due to the missing user interface (it's executed/configured by a batch file), there is some extra work to do there to make it safe. Or just build a GUI because why not? But that won't happen until the 10th. I'd recommend one of the benchmarks that are currently supported. With BenchMate 0.8 there will be some additional benchmark categories:

Geekbench 4 - Compute

Cinebench * - CPU (Single Core) <= currently not listed on HWBOT

The new version will come asap, it also adds some very important fixes and a brand new timer skew detection.

-

I dissected wPrime months ago and decided that it can't be rescued. It's an awful benchmark written in Visual Basic 6 with so many flaws that it would be easier to just write a new benchmark. I recommend letting this one rest in peace.

-

Let's do this! I am working on 0.7.2 right now, which fixes a few bugs and also has a few improvements. The CINEBENCH and Geekbench integration was reworked as well.

-

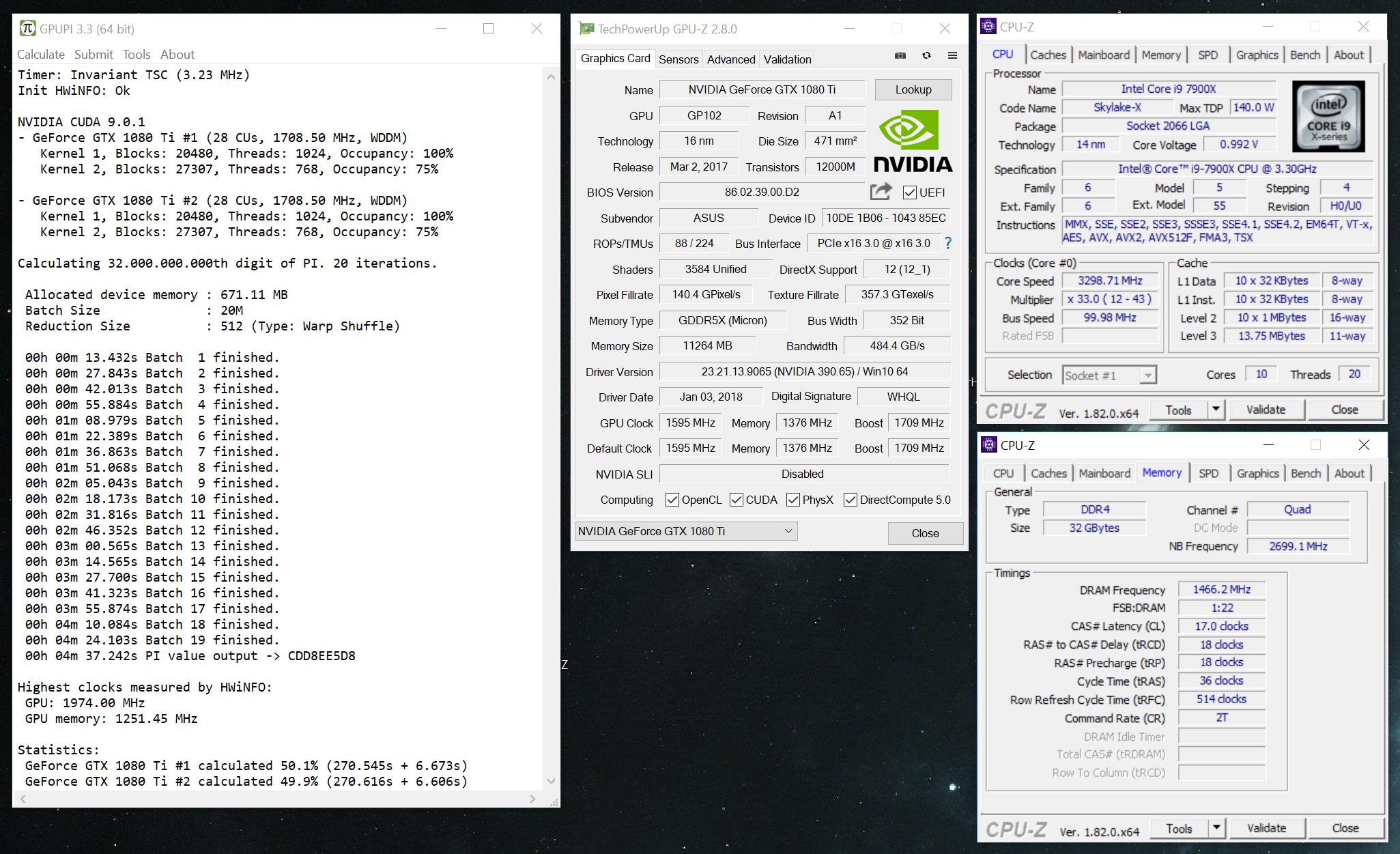

All GPUPI 3.3 releases are allowed for online (and offline) submission. What's the category of the result you are trying to upload? Like GPUPI for CPU 3.3 100M or GPUPI 3.3 1B (GPU)?

-

Interesting. Thx for the update.

-

vcruntime140.dll is part of the Visual C++ 2015 runtime, which is necessary to run all executables built with Visual Studio 2015 and C++. You can download it here: https://www.microsoft.com/de-at/download/details.aspx?id=48145 Select the 64 bit version (x64) for the default benchmark. 32 bit (x86) is needed for 32 bit executables, for example GPUPI's legacy version. But that should only be used for Windows XP and very old GPUs/CPUs. biocredprov.dll is an OS issue that has nothing to do with GPUPI.
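If it's unclear which runtime an executable needs, its bitness can be read from the file header. The following is a small sketch that looks at the machine field of the PE header; it only distinguishes x86 and x64, and the function name is made up for this example.

```python
import struct

# Machine field values from the PE/COFF file header
MACHINE_TYPES = {0x014C: "x86", 0x8664: "x64"}


def pe_machine(data: bytes) -> str:
    """Return "x86" or "x64" for the raw bytes of a Windows executable."""
    if len(data) < 0x40 or data[:2] != b"MZ":
        raise ValueError("not a PE file (missing MZ header)")
    # Offset 0x3C of the DOS header stores where the PE header starts
    (pe_offset,) = struct.unpack_from("<I", data, 0x3C)
    if len(data) < pe_offset + 6 or data[pe_offset:pe_offset + 4] != b"PE\0\0":
        raise ValueError("missing PE signature")
    # The 16-bit machine field directly follows the 4-byte signature
    (machine,) = struct.unpack_from("<H", data, pe_offset + 4)
    return MACHINE_TYPES.get(machine, f"unknown (0x{machine:04x})")
```

Feed it the bytes of the executable in question, for example `pe_machine(open(path, "rb").read())`. A result of "x64" means the x64 runtime package is the one to install, "x86" means the 32 bit package.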

-

XTU always had a 32 and a 64 bit inner benchmark executable that was correctly chosen by the bitness of the OS. So that has nothing to do with the improved performance. The reason for the increased scores is that the new version uses Prime95 29.4 while the old one uses the slower 27.7. Regarding benchmark security and timer reliability, Intel opened up another can of whoop ass by using QueryPerformanceCounter() for timing. As I have not seen any countermeasures that take care of timer skewing, changing your bclock in Win 8/10 should change the score. The old version had a "fix" for that behaviour: it calculated the TSC frequency on a regular basis, so it successfully adapted to bclock changes as well. Also, NOT A SINGLE ONE of the flaws I found was addressed with the new version: https://www.overclockers.at/articles/intels-xtu-analyzed
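The effect can be put into numbers with a simplified model. Assume the timer ticks in proportion to the current bclock, while the software keeps converting ticks with the frequency it determined earlier (for example at boot). Both the model and the numbers are illustrative assumptions, not measurements of XTU.

```python
def measured_seconds(real_seconds: float,
                     bclk_assumed_mhz: float,
                     bclk_actual_mhz: float) -> float:
    """Time reported by a timer whose tick rate follows the actual bclock
    but whose ticks are converted with the frequency of the assumed bclock."""
    if bclk_assumed_mhz <= 0 or bclk_actual_mhz <= 0:
        raise ValueError("bclock values must be positive")
    return real_seconds * bclk_actual_mhz / bclk_assumed_mhz


def recalibrated_seconds(segments: list[tuple[float, float]]) -> float:
    """Same run, but the frequency is re-measured for every segment.

    `segments` holds (real_seconds, bclk_mhz) pairs. Because each segment is
    converted with its own current frequency, the bclock cancels out.
    """
    return sum(measured_seconds(real, bclk, bclk) for real, bclk in segments)


# A 60 s run with the bclock lowered from 100 to 95 MHz after calibration
print(measured_seconds(60.0, 100.0, 95.0))                # 57.0
# The same run with regular recalibration
print(recalibrated_seconds([(30.0, 100.0), (30.0, 95.0)]))  # 60.0
```

In this model the skewed run reports 57 s instead of 60 s, so the score looks 5 % better than it is. Recalculating the frequency on a regular basis, as the old version did, removes the dependency on the bclock.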

-

GPUPI reports the correct number of devices, there is no upper limit for the submission. Seems like the bot is not categorizing the (old?) results correctly. I always thought that these Multi-GPU categories are somewhat off. To have them for one to four cards seems necessary, but otherwise there should be an "all out" category rather than those mostly empty ones in between.

-

This is normally the solution. As far as I can see on your screenshots you are not installing the AMD OpenCL SDK 2.9.1 or 3.0, but an old legacy AMD display driver. Try this download: https://www.softpedia.com/get/Programming/SDK-DDK/ATI-Stream-SDK.shtml

-

GPUPI 3.2 and 3.3.3 woes on X79 platform

_mat_ replied to Leeghoofd's topic in HWBOT Software and Apps

@Leeghoofd, those are two very different errors. Crashes while saving the result file are hardware detection problems; they have to be looked at from platform to platform and from GPUPI version to GPUPI version. GPUPI 3.2 is less stable than 3.3 in that regard, but that's what newer versions are for I guess. Not getting one loop out of it seems to be a fatal error during the kernel compilation phase. GPUPI 3.3.3 is more optimized and uses less common functionalities of OpenCL. Some of those seem problematic in combination with certain platforms and drivers.

Both problems will be fixed with GPUPI 4 because the hardware detection has been improved (as with every version) and OpenCL will no longer be used for CPUs (so no compilation phase). I will NOT fix any of the GPUPI 3.x versions, they are dead. I cannot bugfix two different versions, it is not possible with my already limited time at hand. So all fixes currently go into GPUPI 4, which will be released after BenchMate.