Everything posted by _mat_

-

_mat_ - Ryzen 7 2700X @ 3530MHz - 1566 marks XTU

_mat_ replied to zeropluszero's topic in Result Discussions

I reported it in autumn 2017 and detailed the vulnerabilities to Intel and Futuremark. It was full disclosure; I talked to the XTU devs and some 3DMark devs. Massman accompanied the process and we were searching for a solution back then. He knew that this was (and is) an important step for HWBOT to validate results, even tried to finance it and helped wherever he could. Sadly it was too close to his departure, so I hadn't come up with a viable solution by that point.

Yes, I think that HWBOT is responsible for the quality of benchmark submissions. I don't criticize the manual moderation process or your work. You are dedicating a lot of effort to this and it's very much appreciated. But if you want HWBOT to still be taken seriously as a platform to host and validate world records, rankings and comparable results, you should at least not ignore the fact that there is a problem. You don't even need to come up with a solution, but accept it, include yourself in the discussion and contribute if you can.

To blame it on overclockers in general is NOT the solution. Most play by the rules, buy expensive hardware and bench for hours to get credit for their achievements. But a single "OnePageBook" devalues these efforts just by editing a screenshot in what ... 3 minutes tops? That's a problem that overclocking has suffered from for as long as I can remember. Let's try to solve it together.

-

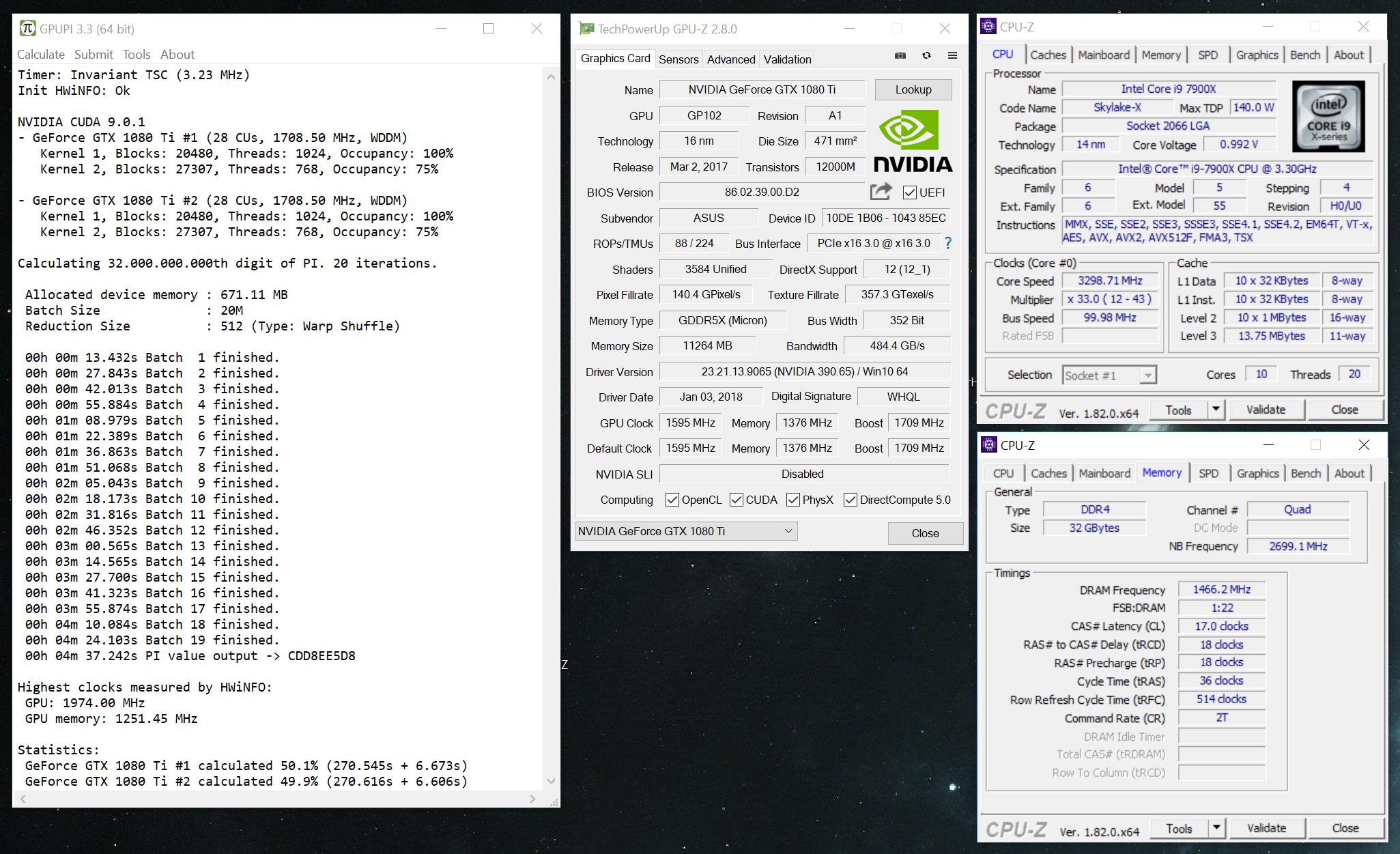

H2o vs. Ln2 - 4x Titan V @ 2100/1080MHz - 0sec 991ms GPUPI - 1B

_mat_ replied to a topic in Result Discussions

This is GPUPI 3.1.1, so it's valid for this category. GPUPI 3.3 was not released until April.

-

_mat_ - Ryzen 7 2700X @ 3530MHz - 1566 marks XTU

_mat_ replied to zeropluszero's topic in Result Discussions

If I were to do something like that, it would be one additional application that runs next to the legacy benchmarks. It injects proper time functions into the executable so it will be able to use my Reliable Benchmark Timer driver. It's kind of an emulation for weak Win32 API timer functions. The wrapper could also handle screenshot taking and uploading, some security checks and hardware detection.

All that would need some changes in the HWBOT submission or, and that's something I would prefer, its own validation site. It will show a screenshot with a watermark that adds a timestamp and some hardware information that has to match the uploaded values on the site. I will need something similar for the new GPUPI 4 as well, so basically it's something I am going to do. Without the screenshot watermark of course, that's a workaround for legacy benchmarks.

The big problem with this is that some benchmarks need Windows XP to perform at their best. On XP there is no such thing as a reliable timer and I won't write an alternate driver for some minor security checks. The application could still work for screenshot timestamping though, to have at least some validation. But I need to think that over.

Another question: Would anyone here donate for a project like this?

-

_mat_ - Ryzen 7 2700X @ 3530MHz - 1566 marks XTU

_mat_ replied to zeropluszero's topic in Result Discussions

The reasons why I am publishing this right now:
1) I have first talked to benchmark vendors (including Intel) and they know about these problems, yet they do nothing.
2) HWBOT knows about this, yet there isn't even an official statement on the current state of benchmark security.
3) I've shown this on the HWBOT forums and nobody really cared.

To sum it up: Nobody cares when actually everybody involved should.

Benchmarks can't be taken seriously in their current state. There are not enough security measures implemented, the way timers are used is unreliable and the concept of handling results and hardware information is just plain wrong. I can go into details if anybody is interested.

Additionally, every benchmark developer is on their own when it comes to implementing the necessary "features" above. That leads to inconsistent quality and reliability of the benchmarks and their results. This was made pretty clear after the time skewing debacle and the mess it has left. But smaller "attacks" have followed as well, like the Nehalem dual socket phenomenon where people really believed that those old Xeons were world material. These problems hit hard because old benchmark versions have to be excluded from ranking or legacy benchmarks get more difficult to moderate.

All that leads to trust issues among overclockers, especially new ones. And that's totally understandable because even I am coming across GPUPI results that I can't comprehend. It's not only about cheating but about trusting the mechanisms of the benchmarks, the hardware information gathering and the timers.

So we compare the performance of hardware with benchmarks that are not built with reliability in mind. Even with GPUPI 3 I am right now only chasing problems, not preventing them in the first place. So what needs to be done is a uniform mechanism for timing, result handling and hardware detection that all benchmarks can use. The validation logic needs to be transferred to the submission server as well, so decisions for result exclusion can be made at any point, even for the past.

So why am I showing this? Because things need to be taken seriously from all sides and have to be changed for the better.

-

_mat_ - Ryzen 7 2700X @ 3530MHz - 1566 marks XTU

_mat_ replied to zeropluszero's topic in Result Discussions

I will eventually. But first I want you to know the full implications of this tool. CPU-Z will take a hit as well, although not on every platform. Skylake X can't be helped though: https://valid.x86.fr/5qy93f

-

You will be dearly missed in Austria of course, but as you well said: Why be part of a team when you are the only one in it? Sad but true, overclocking is pretty much dead in Austria. Have fun and kick ass at OCN.

-

gpupi legacy issue

_mat_ replied to Remarc's topic in HWBOT Development: bugs, features and suggestions

Open the debug log (Menu => Tools => Debug Log) and post a screenshot or the output of the log when the crash appears. The way it sounds to me, it has nothing to do with HWiNFO. Especially when it's disabled, everything is done with WMI instead (a normal Windows feature).

-

Forget Linde, they are expensive and mostly idiots. I am pretty happy with Messer in Austria/Vienna. They also have locations in Germany. Seems like the nearest is 45 km: https://www.messer.de/gasepartner They provide transport to the doorstep as well without extra payment. Cost is less than 1 Euro per liter, can't remember exactly. For a show with 200 liters it's about 150 Euros including several leased containers. You can also try Air Liquide. Had some good experiences there as well (in Salzburg though).

-

gpupi legacy issue

_mat_ replied to Remarc's topic in HWBOT Development: bugs, features and suggestions

Try to disable HWiNFO support in the dialog before the run and let me know if it works.

-

Casanova, this is in no way intended and should not be the message taken away from my post. The truth is that I have been researching timers and benchmark security in general for quite some time now and found multiple issues. The issue shown in the video is just one of those, although it's a pretty tough one. It's important to know that not a single bit of executables, drivers or OS code was edited to achieve these results. What has changed is how the system is perceived through the eyes of the benchmark. It's just not reliable the way it's done currently. What really should be taken away from this is the fact that this needs attention, needs to be changed and fixed. And it's not only one thing.

Edit: Just to clarify, HWBOT has known about these problems since last year.
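To illustrate why a single time source is hard to trust, here is a minimal sketch in Python that measures the same workload with two clocks and checks whether they agree. This is not how any existing benchmark or driver works; the function names `measure` and `clocks_agree` and the 1% tolerance are assumptions made up for this example, and a check like this running on the same machine would not stop a determined attacker.

```python
import time
from typing import Callable, Tuple


def measure(workload: Callable[[], None]) -> Tuple[float, float]:
    """Run the workload once and return its duration as seen by two clocks."""
    start_a = time.perf_counter()
    start_b = time.monotonic()
    workload()
    elapsed_a = time.perf_counter() - start_a
    elapsed_b = time.monotonic() - start_b
    return elapsed_a, elapsed_b


def clocks_agree(elapsed_a: float, elapsed_b: float, tolerance: float = 0.01) -> bool:
    """Return True when both durations differ by at most `tolerance` (relative)."""
    if elapsed_a <= 0 or elapsed_b <= 0:
        # A zero or negative duration is never plausible for a benchmark run.
        return False
    difference = abs(elapsed_a - elapsed_b)
    return difference / max(elapsed_a, elapsed_b) <= tolerance
```

A result whose two durations disagree by more than the tolerance could then be flagged for closer inspection instead of being accepted on the basis of one timer alone.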

-

I think the new points system is not a good solution because it doesn't motivate people to compete in all benches equally. In fact the whole idea of "the more subs the more points" is shifting the interest towards certain benches where it is next to impossible to compete against the well known overclockers, while others are not benched at all for obvious reasons. A fair points distribution system could make it easier to compete in the long run, which could attract new benchers.

There is also the big topic of trust issues. Too many benchmarks can be easily tricked because the screenshot is the only source to judge by. These benchmarks need to be updated or removed. Although this is a very painful process, it is necessary for extreme overclocking to be taken seriously. But that's not all, the following things need change in my opinion:

Timing and result handling have to be unified! Benchmarks are currently horrible at this, some are better, some are so easily cheatable it's a joke.

The submission system should have an SDK, so it can be easily integrated into benchmarks and updated in a unified way when changes are needed. HWiNFO, a unified timer and result handling have to be a part of this SDK. The benchmark just feeds the right values to it and fires the submission process.

The logic/validation of submissions has to be server-side. The benchmark can do it on its own to improve user experience, but the server decides if this is a valid score. This would allow old benchmark versions to be valid in certain situations and would have circumvented the timer skew bugs in Windows 8 and higher. The next bug will come along sometime and the already difficult OS/hardware/benchmark combination will end up as a clusterf***.

Benchmarks should not bother with vendor and device names of hardware, because there is always a problem with matching the local and server names. The benchmark will therefore only send the vendor and device ids, the server translates them back into readable names.

Benchmarks should be thoroughly inspected by someone with knowledge of reverse engineering and locating serious security issues. Together with the SDK submission integration this could end up in a certificate of some kind.

I know that this is a lot of input on benchmarks, but don't forget that benchmarks are the main focus of overclocking. Without them there is nothing to measure, so they are key to the overclocking business.

To understand how bad the situation currently is, I decided to finally release the following video to the public. This is a tool I wrote in not even a day that completely screws with CPU-Z and XTU in an undetectable manner. I won't go into technical details for now for the sake of extreme overclocking in general. With GPUPI 4 I already found a way to fix these security issues (and lots of others).
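The server-side part of this could look roughly like the following Python sketch. It is only an illustration under assumptions: the `Submission` fields, the rule `skewable_timer_rule`, the OS list in it and the value `"reliable"` are hypothetical and not taken from HWBOT or GPUPI. The three vendor ids are the well known PCI vendor ids; the single device entry is illustrative and a real server would load a complete PCI id database.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional, Tuple

# Illustrative lookup tables; a real server would load a full PCI id database.
VENDORS: Dict[int, str] = {0x10DE: "NVIDIA", 0x1002: "AMD", 0x8086: "Intel"}
DEVICES: Dict[Tuple[int, int], str] = {(0x10DE, 0x1B06): "GeForce GTX 1080 Ti"}


@dataclass(frozen=True)
class Submission:
    vendor_id: int
    device_id: int
    benchmark_version: str
    os_name: str
    timer: str  # hypothetical field: which timer the benchmark says it used


def readable_name(vendor_id: int, device_id: int) -> str:
    """Translate the ids sent by the benchmark into a name on the server."""
    vendor = VENDORS.get(vendor_id, f"Unknown vendor {vendor_id:04X}")
    device = DEVICES.get((vendor_id, device_id), f"device {device_id:04X}")
    return f"{vendor} {device}"


# A rule returns a rejection reason, or None if it has no objection.
Rule = Callable[[Submission], Optional[str]]


def skewable_timer_rule(sub: Submission) -> Optional[str]:
    # Hypothetical rule: reject runs on an OS with known timer skew
    # unless the benchmark reports a timer that is considered reliable.
    if sub.os_name in {"Windows 8", "Windows 10"} and sub.timer != "reliable":
        return "timer can be skewed on this OS"
    return None


def validate(sub: Submission, rules: List[Rule]) -> List[str]:
    """Apply every rule and collect the reasons for rejecting the submission."""
    reasons = []
    for rule in rules:
        reason = rule(sub)
        if reason is not None:
            reasons.append(reason)
    return reasons
```

Because the rules live on the server, a new rule can be added later and run again over stored submissions, so a result can be excluded after the fact without releasing a new benchmark version.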

-

The performance of the new versions in the GPU categories will be the same. The CPU categories are a different chapter as there will be a native AVX path that should be faster than OpenCL on modern CPUs. So yes, my recommendation will be to rename the 3.3 categories to GPUPI 4. Just like Geekbench.

-

GPUPI 3.3 will be discontinued, so no, there won't be a direct upload to the new benchmark category. BUT there will be good news soon. I am currently working on GPUPI 4 which will introduce a whole new foundation for timing and handling of benchmark results. I won't get into it right now, but I can guarantee some surprises. I also have the intention to maintain GPUPI 3.2 by releasing a minor version until GPUPI 4 gets out of the beta phase.

-

The problem is that the GPUPI 3.2 Legacy version is compiled with CUDA 6.5 to stay compatible with as many NVIDIA cards as possible (all that support double precision calculations in fact). But CUDA 6.5 will never be as fast as GPUPI 2.3.x with CUDA 8 support. Same goes for GPUPI 3.x with CUDA 9 btw. Well, it seems like this will be a huge problem with continued support for new graphics card architectures and especially new CUDA versions. But I may have already found a way to tackle this with runtime compilation or by running PTX assembly directly. I will have a look at this in the next few weeks, it will need a lot of testing. As for submission with GPUPI 2.x please read my answer here:

-

It just stops when compiling the kernels. Well, that's bad. Please try this beta version of 3.3.3 that adds a lot of detailed debug log messages to the initialization of CUDA and OpenCL: https://www.overclockers.at/downloads/projects/GPUPI 3.3.3 Beta.zip Please post the contents of the debug log, thank you!

-

Would definitely be one of the hardest GPU categories to give points, right? GTX 1080 Ti at 3000 MHz for over 4 minutes anyone?

-

Yes, it's cleaner code with improved comparability between different devices and the OpenCL path is now implemented correctly as it always should have been. The different OpenCL drivers produce closer results (although AMD OpenCL 1.2 = AMD APP SDK 2.9-1 is now the best choice in all categories), Batch Size and Reduction Size are not as picky as they were before and on NVIDIA cards the OpenCL implementation comes very close to the CUDA implementation, which indicates that everything is done right now. The bottom line is GPUPI is now much better as a benchmark in general.

I would have done this with GPUPI 1 already, if I could have. But I wasn't good enough at OpenCL coding and mathematics back then (OpenCL is a brutal beast though). The good news is that something like this won't happen again. I will not touch the algorithm anymore, because it's pretty much maxed out the way I do it.

The next step is an OpenMP path that gets rid of the OpenCL implementation, but that's many months away and will not overrule current results. The CPU path will be split into OpenCL and OpenMP (or Native, don't know yet), so no rebenching necessary. The new path will make use of AVX and whatever comes next to support the hell out of everything that comes in my way.

I know the XTU coders and they don't seem to be interested in overclocking, let alone competitive oc. They just do their job and as far as my experience with XTU SDK goes, it's not entirely a good one (sry guys). I really try to do things differently with GPUPI. I want it to be on the bleeding edge too, but I wouldn't have introduced the speedup with 3.3 if everybody in this thread had stood against it. As I already said: GPUPI should first and foremost be fun to bench.

-

The last bugfix release is already an hour old, so let's post a new one: GPUPI 3.3.2

Download here: https://www.overclockers.at/news/gpupi-3-is-now-official

Changelog:
Bugfix: Application crashed while saving a result file
Bugfix: Some Intel iGPs could not compile the OpenCL kernels due to an incompatibility
Bugfix: Application crashed on certain systems during benchmark run initialization (due to memory detection)
The hardware detection of the memory manufacturer is a delicate process and can crash the application, so it will be skipped when running HWiNFO in "Safe Mode"
Improved the error message when an OpenCL reduction kernel can't be initialized due to limited shared memory (only possible on weak iGPs)

All open bugs should be fixed now! Thanks to everybody that helped to improve GPUPI!

-

Thank you! I already fixed it and it will be in the next bugfix release.

-

Thanks to the open Debug Log window I can narrow this down to the memory not being properly detected by HWiNFO. Can you please test GPUPI 3.2 as well? No screenshot needed, just a confirmation that it's not working there either. Thanks!

-

Can you do me a favor please and post a screenshot with the Debug Log open (Menu: Tools => Debug Log)? Please open the log window before you save the result file. Is that possible or does the application close instantly?