Everything posted by _mat_

-

Just a quick heads up! Tomorrow I will release GPUPI 2.3 with multiple bugfixes and features. I am very happy with the new support for CUDA 8.0 plus several optimizations of the CUDA kernels that finally led to faster scores than the OpenCL implementation. Have a look at this score with a GTX 1080, it's top 10 on air cooling - and my sample doesn't really clock well. So hold your horses for now if you are benching NVIDIA cards with GPUPI.

-

Dancop - GeForce GTX 1080 @ 2645/1401MHz - 13sec 492ms GPUPI - 1B

_mat_ replied to raules009's topic in Result Discussions

Well, that was inevitable - GTX 1080 is the new champ in GPUPI! Congrats, Dancop! Awesome work!

-

Nice and thanks for reporting back! Added both solutions to the FAQ.

-

Awesome work and congrats!

-

No, it's not necessary. OpenCL works out of the box when installed on the system.

-

I don't know what Geekbench really does when benching memory. If it's mostly a bandwidth test, it should be affected as well.

The gap between 4/512 and 1/64 says a lot. The more closely the batch size is matched to the architecture itself, the faster the bench will be. That's because the workload is aligned to the maximum work size that can be run in parallel. 4M seems to be the best choice for the 6100 with 2 cores/4 threads.

About the same is true for the reduction size. The bigger the better, because 512 means that 512 sums are added up into one, over and over, until only one sum remains. Let's say that we want to sum up 8192 single numbers. That would be:

step 1: sum of 512 numbers, sum of 512 numbers, ... (16 times)
step 2: sum of 16 numbers = result

Whereas a reduction size of 64 would produce:

step 1: sum of 64 numbers, sum of 64 numbers, sum of 64 numbers ... (128 times)
step 2: sum of 64 numbers, sum of 64 numbers
step 3: sum of 2 numbers = result

If you consider that GPUPI produces billions of partial results that need to be added up, then 512 also needs far fewer steps in general to sum up the batches after they are calculated. Additionally, the bigger the batch size, the fewer reduction passes have to be made for the calculation. So these two values mean a lot for the whole computation.
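The pass counts in the 8192-number example can be reproduced with a small Python sketch. This is only an illustration of the general technique, not GPUPI's actual kernel code; the function name `reduce_in_passes` and its parameters are made up for this example.

```python
def reduce_in_passes(values, reduction_size):
    """Sum `values` by repeatedly adding up groups of `reduction_size` items.

    Returns the final sum and the number of partial sums left after each pass.
    Hypothetical helper for illustration, not taken from GPUPI.
    """
    if reduction_size < 2:
        raise ValueError("reduction_size must be at least 2")
    if not values:
        return 0, []
    sums_per_pass = []
    while len(values) > 1:
        # Every group is independent, so a real device could sum them in parallel.
        values = [sum(values[i:i + reduction_size])
                  for i in range(0, len(values), reduction_size)]
        sums_per_pass.append(len(values))
    return values[0], sums_per_pass


numbers = list(range(8192))
print(reduce_in_passes(numbers, 512))  # (33550336, [16, 1])
print(reduce_in_passes(numbers, 64))   # (33550336, [128, 2, 1])
```

Both calls return the same total, but the reduction size of 512 gets there in two passes while 64 needs three.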

-

Have you tried a memory benchmark yet? Try AIDA64 to test your bandwidth, it should be impacted as well. Otherwise it's a driver issue, but I doubt it. We never had an efficiency problem with the memory reduction before. It's btw a very common technique for summing up a lot of memory in parallel. The pi calculation itself depends on much more to be efficient.

-

What exactly do you mean by crashing? A runtime assertion? Please specify the exact error message and have a look at GPUPI.log as well. A broken OpenCL driver is btw a much more common cause of such an error. Please uninstall the drivers and install them again.

-

And it has to be enabled in Windows, see the GPUPI FAQ section again, you'll find a command line statement to switch to HPET. But that has nothing to do with your problem. It's just a more precise timer, but only for a few ms of the measured end time.

-

Unless that service kills your memory bandwidth, it should cause no trouble. Try other memory benchmarks as well to see if the performance is on par. I am still leaning more towards a hardware problem, not a driver or software issue. Also have a look at your NB frequency, 5900 MHz is very high.

-

Your reduction time is completely off, that's the second time listed in the statistics. Others have about 10 seconds, maybe 15, yours is 87 seconds. So check the memory for faulty reads and writes, maybe it's overclocked too high.

-

Post the score please, I'll have a look. Btw, normally you should not use the Intel OpenCL drivers. Use the AMD OpenCL drivers, they are much faster on Intel CPUs as well. If you don't know how to install it, please take a look at the GPUPI international support thread: https://www.overclockers.at/news/gpupi-international-support-thread There is a FAQ section that has a link to the AMD APP SDK, if you don't have an AMD graphics card.

-

What's your Intel OpenCL driver version? Any special batch or reduction sizes, or is everything at default (20M, 256)? I currently cannot reproduce the error.

-

Alex@ro - Core i7 6700K @ 6620MHz - 3min 56sec 825ms GPUPI for CPU - 1B

_mat_ replied to a topic in Result Discussions

Awesome job, congrats!

-

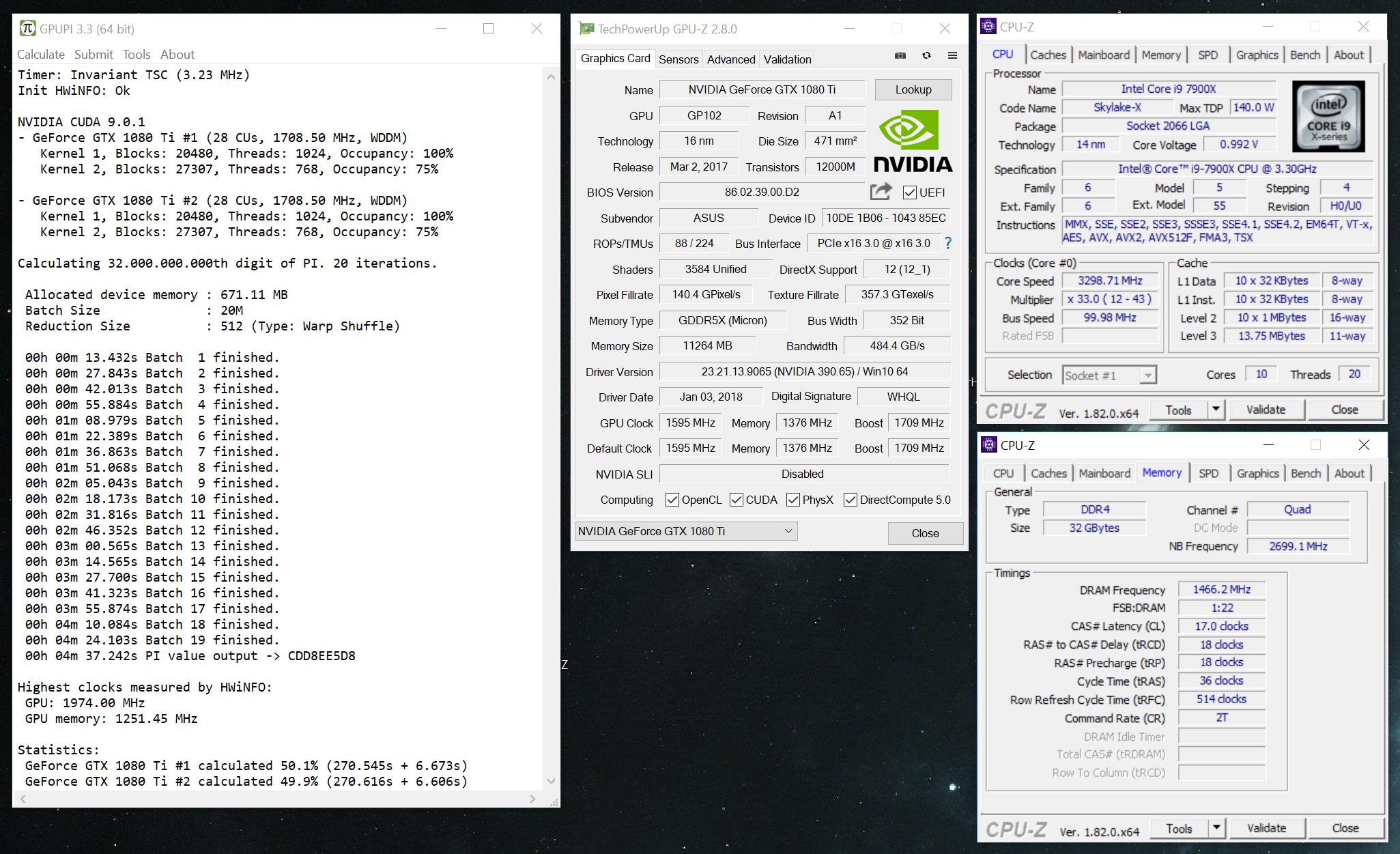

That's exactly how it's already done. I've discussed this with Massman as well before launching the multi GPU version. It's also easy to prove: The statistics listed after the result give insight into the performance of each GPU. For automatic submission with a successfully detected GPU, the benchmark already does this on its own. I think it's the best option by far, also for the DX12 benchmarks coming soon. Otherwise there could be a "nobody cares about your GPU configuration" category, but I guess that won't be too exciting for slower and older cards. If it helps, I can add more information about this rule inside the benchmark. But this should be properly discussed and officially enforced as soon as possible. GPUPI won't be the only benchmark to allow mixed cards in the near future!

-

The official Y-Cruncher Beta Competition Discussion

_mat_ replied to Massman's topic in HWBOT Competitions

Glad you got it working and nicely done as well! You have to run the wrapper with administrator rights to call bcdedit, am I correct? That's why I decided to just give a link to my FAQ section.

-

If the detection is saved within the file, there is no way to undo this without having the private key to decrypt the data. And HWBOT will hate you for submitting CPUs or GPUs that cannot be matched 100% with the database, and will therefore neglect you as if you had never benched a single system. It's a dangerous game, so when benching new or strange hardware, better skip the detection.

-

The official Y-Cruncher Beta Competition Discussion

_mat_ replied to Massman's topic in HWBOT Competitions

No kernel mode driver necessary. Just avoid certain timing methods on some Windows versions to avoid clock skew. I introduced this with GPUPI 2.1 and wrote a small article including some tests: https://www.overclockers.at/articles/gpupi-2-1 Let me know if you need any kind of help. And keep up the good work!

-

The detection of AMD graphics cards is sometimes off, because they relabel the cards with the same GPUs and Compute Units and do not expose the name of the model itself within the APIs. But this could be a bug in GPUPI, because the R9 285 has a different CU count than the R9 380X, although both are shown with the codename Tonga. I will have a look at this and fix it, if necessary. Until then please uncheck the "GPU detection" on the submission dialog. This will send you to an intermediate site on HWBOT, where you will be able to enter the graphics card manually.

-

You are running 100M on the GPU, which is fine but not a HWBOT category. Select 1B or 32B for graphics cards and 100M and 1B for CPUs. After a valid result the menu items for submission will be unlocked.

-

NAMEGT - Core i3 6100 @ 6400MHz - 8min 9sec 182ms GPUPI for CPU - 1B

_mat_ replied to Achill3uS's topic in Result Discussions

6.4 with 1.95 volts? Bunny yeah!

-

OLDcomer - >4 Radeon R9 290X @ 1280/1500MHz - 2sec 502ms GPUPI - 1B

_mat_ replied to Leeghoofd's topic in Result Discussions

Awesome project and congrats on the well-earned world record!

-

Invalid result means your hardware is not stable. Even the slightest miscalculation in the first 27 decimals in just one of the billions of partial calculations can output the wrong result. Sometimes I'm amazed that it works so well with overclocked cards at all.