havli

Members

Posts: 398

Days Won: 3


Everything posted by havli

-

Not that it matters to me... but this seems like a very lazy fix. Converting the old input format to the new one is very basic math and an easy implementation - 10 lines of source code at most. And making users convert the score to seconds themselves... is a good way to make them leave.
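To show how small that conversion is, here is a minimal sketch. It assumes the old format was a score that is a rate over a fixed, known workload (the post doesn't say which benchmark or which units). Under that assumption, going between score and seconds is a single division each way. `workUnits` and the example values are hypothetical.

```java
// Minimal sketch, assuming the old score is a rate over a fixed, known workload
// (e.g. frames per second for a fixed frame count). All values are hypothetical.
class ScoreToSeconds {
    // seconds = work / rate
    static double toSeconds(double score, double workUnits) {
        if (score <= 0) throw new IllegalArgumentException("score must be positive");
        return workUnits / score;
    }

    // rate = work / seconds (the inverse conversion)
    static double toScore(double seconds, double workUnits) {
        if (seconds <= 0) throw new IllegalArgumentException("seconds must be positive");
        return workUnits / seconds;
    }

    public static void main(String[] args) {
        double workUnits = 1000.0; // hypothetical workload, e.g. 1000 frames
        double oldScore = 25.0;    // hypothetical old-format score, e.g. 25 fps
        System.out.println(toSeconds(oldScore, workUnits) + " s");
    }
}
```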

-

Enabling HWBOT X265 to run on Asus TUSI-M (SiS 630ET)

havli replied to sukhoikip's topic in HWBOT Software and Apps

Any platform older than AM2 or 775 is very unlikely to support HPET. So for a Pentium 3, version 2.2 is the way to go, and IIRC you might need to enable legacy mode too. The download links should all be here: http://hw-museum.cz/article/1/hwbot-x265-benchmark/3

[Suggestion] Change how memory global and hwpoints are awarded

havli replied to ground's topic in HWBOT 2021 Edition

I proposed something similar maybe 10 years ago... nothing happened. So maybe this time.

I tried my best to have correct info on the hw-museum site. However, GPU-Z doesn't detect the NV41/42 core properly. Therefore the GF6800 GS is a huge mess here on HWBOT.

-

Hwbot x265 benchmark has non-normalized download links.

havli replied to a topic in HWBOT Software and Apps

hw-museum.cz is the homepage of the x265 bench, so all downloads there should be correct. I can't guarantee anything else.

aquamark score correct but GFX/CPU subscores looks weird

havli replied to Matsglobetrotter's topic in HWBOT Aquamark³ Wrapper

Constant; it happened every time on this machine, also with different GPUs (GTX 670, HD 5870).

3dMark 06...no points please reculate

havli replied to Sweet's topic in Submission & member moderation

Speaking of scores to analyze, this one might help too: https://hwbot.org/submission/4939482_havli_aquamark_geforce_gtx_660_oem_466076_marks It was originally detected as a regular GTX 660. After I edited it, it only gives 2 points.

aquamark score correct but GFX/CPU subscores looks weird

havli replied to Matsglobetrotter's topic in HWBOT Aquamark³ Wrapper

I have a similar problem here: https://hwbot.org/submission/4939482_havli_aquamark_geforce_gtx_660_oem_466076_marks The score file is attached: 466076.hwbot

I have a new rig for benchmarking old GPUs: i7 8700K @ 5.4 GHz, ASRock Z370 Extreme4 and 2x8 GB DDR4 4100. The problem is that I can't get proper 3DM01 scores with this system. A few years ago I had a 7700K with a Maximus VIII Ranger, and that system was somewhat faster despite the lower CPU clock and much slower memory. As far as I can tell, the drivers and settings are the same as I had back in 2017. The WinXP install is different, but that can't make the difference, or can it? All the CPU-limited tests (especially CH, DH, LH) are slower on the Z370. For instance, these scores:
https://hwbot.org/submission/3527475_havli_3dmark2001_se_radeon_hd_5850_137805_marks
https://hwbot.org/submission/4940810_havli_3dmark2001_se_radeon_hd_5870_134006_marks
https://hwbot.org/submission/3525093_havli_3dmark2001_se_geforce_gtx_760_(256bit)_142921_marks
https://hwbot.org/submission/4938985_havli_3dmark2001_se_geforce_gtx_660_oem_133059_marks
All other legacy benchmarks perform better on the 8700K (as expected), but not 01. Is there something I am missing, or are some boards simply slower for some reason, with nothing to be done? Thanks for any tips.

-

I am returning to benchmarking after a few years' break. Is it allowed to bench two different GPUs, for instance a GTX 780 + GTX 780 Ti, and submit it as 2x GTX 780 Ti? I know it was OK in the past... but I'm not sure how it is now. Thanks.

-

It seems there are some bugs in the new points calculation. For example, I submitted this one just a while ago and it was misdetected as a Xeon E5 1607 v3. So I edited it to E5 1650 v3, but now it messed up the ranking and shows 15 points instead of 6... or something like that. https://hwbot.org/submission/4930144_

-

There is no artificial limit on cores/threads. Perhaps the encoder doesn't scale to so many cores... it is 4 years old after all.

-

Perhaps some Windows tweaks, but that is just a wild guess. I never liked tweaking the OS.

-

Javaw.exe is just the benchmark GUI; it should be left as it is. It has no effect on performance. When you launch the benchmark run with overkill enabled, two or more instances of the x265 encoder will show up in Task Manager, and these should have the selected priority set. When running overkill with high(er) priority, the Windows scheduler might assign more system resources to one instance and fewer to the other, which results in one instance running faster and the other slower. If the scores vary too much, the final score won't be valid.
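A sketch of why unequal priorities can invalidate an overkill run. It assumes the final check compares the per-instance framerates and rejects the run when they diverge too much. The 5% `MAX_RELATIVE_SPREAD` threshold and the spread formula are hypothetical; the post doesn't state the real rule.

```java
import java.util.Arrays;

// Sketch, assuming each overkill instance reports its own fps and the run is rejected
// when the slowest instance lags the fastest by more than a threshold.
// MAX_RELATIVE_SPREAD is a hypothetical value, not the benchmark's real limit.
class OverkillSpread {
    static final double MAX_RELATIVE_SPREAD = 0.05; // hypothetical 5 % tolerance

    // relative spread = (fastest - slowest) / fastest
    static double relativeSpread(double[] instanceFps) {
        double max = Arrays.stream(instanceFps).max().orElseThrow();
        double min = Arrays.stream(instanceFps).min().orElseThrow();
        return (max - min) / max;
    }

    // Overkill means two or more instances; a single value is not a valid overkill run.
    static boolean isValid(double[] instanceFps) {
        return instanceFps.length >= 2 && relativeSpread(instanceFps) <= MAX_RELATIVE_SPREAD;
    }

    public static void main(String[] args) {
        double[] balanced = {30.0, 29.5}; // normal priority: instances run about evenly
        double[] skewed = {36.0, 24.0};   // high priority: scheduler favours one instance
        System.out.println(isValid(balanced)); // true
        System.out.println(isValid(skewed));   // false
    }
}
```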

-

Way too much FPS in X265 1080P 2.1.0

havli replied to unityofsaints's topic in HWBOT Software and Apps

I have no plans to add stress testing. There are plenty of better applications for this.

I don't care what Intel advertises. The important part is how the CPU actually runs. And unless your heatsink falls off or something... it will never run at base clock. See here: https://www.techpowerup.com/review/intel-core-i9-9900k/17.html Always at 4.4 GHz or more. And depending on the board and BIOS settings, it might even run 4.8 GHz all-core (with a TDP of 200W). Yeah, a 50% OC is possible on the 9900K, but only with LN2 at >7 GHz.

-

Nonsense - the 9900K never runs at base clock. Default turbo is 4.8 GHz... so an OC to 5.1 GHz = +6.25%. This is how it works.
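The percentage above is measured against the clock the CPU actually runs at stock. A small sketch of that arithmetic, using the post's 4.8 GHz default turbo and, for comparison only, Intel's rated 3.6 GHz base clock for the i9-9900K (the base figure is not from the post):

```java
// Overclock gain as a percentage of a reference clock, and the clock needed for a given gain.
// 4.8 GHz is the default turbo quoted in the post; 3.6 GHz is Intel's rated base clock.
class OcPercent {
    // gain % = (oc / reference - 1) * 100
    static double gainPercent(double referenceGHz, double ocGHz) {
        return (ocGHz / referenceGHz - 1.0) * 100.0;
    }

    // clock needed = reference * (1 + gain / 100)
    static double clockForGain(double referenceGHz, double gainPercent) {
        return referenceGHz * (1.0 + gainPercent / 100.0);
    }

    public static void main(String[] args) {
        System.out.printf("vs turbo: %.2f%%%n", gainPercent(4.8, 5.1));
        System.out.printf("vs base:  %.2f%%%n", gainPercent(3.6, 5.1));
        System.out.printf("+50%% over turbo needs %.1f GHz%n", clockForGain(4.8, 50.0));
    }
}
```

Against the turbo clock, 5.1 GHz works out to about 6.25%; against the base clock, the same 5.1 GHz would look like roughly 41.7%, which is why the reference clock matters. A 50% gain over 4.8 GHz needs about 7.2 GHz, consistent with the >7 GHz LN2 figure mentioned before.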

-

I think it is not that difficult or expensive to get a dice pot. But buying the dice itself is rather expensive - last time it cost me $40 for 10 kg. Also, it takes a lot of effort to get a decent subzero score in general. That is most likely why not many people are willing to do it... And while water cooling, for example, is much easier to run, unless you have a really golden CPU/GPU you won't get a competitive score anyway.

-

The official Country Cup 2019 - 10th Anniversary Edition thread.

havli replied to jpmboy's topic in HWBOT Competitions

Well, I consider myself retired from overclocking... so I don't really care what is considered server and what isn't. But as long as you run a C2Q or Core i7 in these boards, it should be desktop enough. https://www.asus.com/Motherboards/P5K_WS/ https://www.asus.com/Motherboards/P6T_WS_Professional/overview/

The official Country Cup 2019 - 10th Anniversary Edition thread.

havli replied to jpmboy's topic in HWBOT Competitions

No need to OC the PCI bus when you can run it at 66 MHz in the PCI-X slot. 775 boards with PCI-X definitely exist, and I think 1366 ones too.
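The reason a 66 MHz slot can replace a PCI overclock is that theoretical peak bus bandwidth scales linearly with clock. With bus width $w$ in bits and clock $f$, the peak is $B = w f / 8$. This assumes a 32-bit card that actually runs at 66 MHz in that slot. Real transfer rates are lower, and the formula says nothing about how much a given benchmark gains.

```latex
B_{\text{peak}} = \frac{w \cdot f}{8},
\qquad
\frac{32 \cdot 33.33\,\text{MHz}}{8} \approx 133.3\,\text{MB/s},
\qquad
\frac{32 \cdot 66.67\,\text{MHz}}{8} \approx 266.7\,\text{MB/s}
```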

The official Country Cup 2019 - 10th Anniversary Edition thread.

havli replied to jpmboy's topic in HWBOT Competitions

You can run a PCI GPU in a PCI-X board; perhaps it will be somewhat faster.

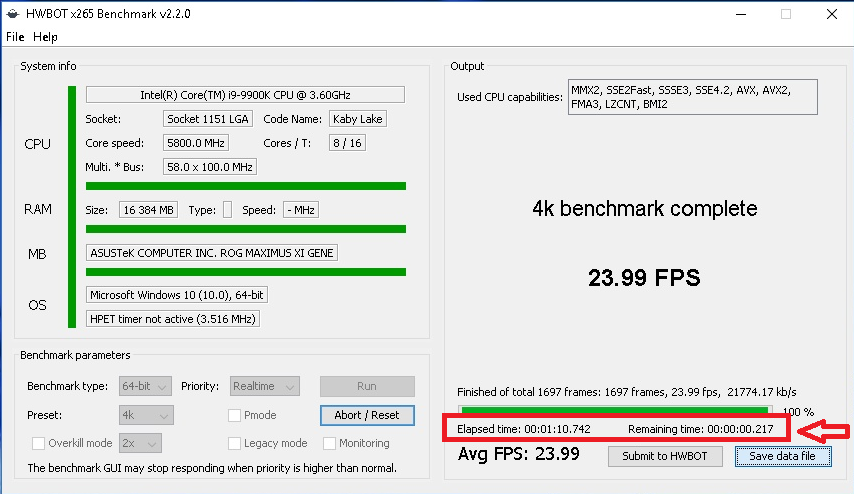

You mean the elapsed and remaining time? Elapsed time is measured directly by the wrapper, using the Java nanoTime() function. Remaining time is a prediction based on the number of frames finished and the actual framerate. The x265 encoder executable also measures time (internally, but reports it as fps). However, this is the "wall clock time"... so it is less accurate and vulnerable to system time manipulation. In earlier versions of the benchmark, the score was taken directly from the encoder. But after some cheated scores were discovered, I changed the time measurement to use nanoTime() and calculate the fps myself.
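A minimal sketch of the timing approach described above. It assumes the wrapper knows the total frame count and receives a running count of finished frames from the encoder. Class and method names are hypothetical; this is not the benchmark's actual source. `System.nanoTime()` is the standard Java call for elapsed-time measurement and is not tied to wall-clock time, so changing the system clock mid-run does not affect it.

```java
// Sketch of wrapper-side timing (hypothetical names, not the benchmark's code):
// elapsed time from System.nanoTime(), fps = frames / elapsed,
// remaining time = frames still to encode / current fps.
class EncodeTimer {
    private final long startNanos = System.nanoTime();
    private final int totalFrames;

    EncodeTimer(int totalFrames) {
        this.totalFrames = totalFrames;
    }

    // Seconds since this timer was created, from the nanoTime() difference.
    double elapsedSeconds() {
        return (System.nanoTime() - startNanos) / 1e9;
    }

    // Framerate computed by the wrapper instead of trusting the encoder's own figure.
    static double fps(int framesDone, double elapsedSeconds) {
        return framesDone / elapsedSeconds;
    }

    // Prediction: frames left divided by the current framerate.
    static double remainingSeconds(int totalFrames, int framesDone, double elapsedSeconds) {
        return (totalFrames - framesDone) / fps(framesDone, elapsedSeconds);
    }

    double remainingSeconds(int framesDone) {
        return remainingSeconds(totalFrames, framesDone, elapsedSeconds());
    }

    public static void main(String[] args) throws InterruptedException {
        EncodeTimer timer = new EncodeTimer(600); // hypothetical clip length in frames
        Thread.sleep(200);                        // stand-in for the encoder making progress
        int framesDone = 60;                      // hypothetical progress from the encoder
        System.out.println("elapsed s: " + timer.elapsedSeconds());
        System.out.println("remaining s: " + timer.remainingSeconds(framesDone));
    }
}
```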

-

@_mat_ At this time I have no plans for future x265 development... other than perhaps reuploading an updated package with a recent CPU-Z version once in a while, like I did this year after the Ryzen 3000 launch. The main executable is more than 1.5 years old, and considering I see barely any problems or complaints... it means either people don't care or everything works reasonably well. If you wish to integrate x265 into BenchMate using the DLL injection method, I have no objections. However, doing a new version with native BenchMate integration seems like too much work, especially the testing and validation (which I always did with each x265 version) on many different platforms, even the obscure ones, and on each of them multiple OSes. That is effort and time I don't want to spend this way.

-

Wasmachineman_NL - Quadro2 Go - 2421 marks 3DMark2001 SE

havli replied to a topic in Result Discussions

Old versions of GPU-Z should work in Win2k. For example, 0.4.6 worked for me: https://hwbot.org/submission/1074470_havli_3dmark2001_se_radeon_7200_ddr_4634_marks

Hint for X265 4K Dual Core: perhaps a locked Skylake i3 / Pentium / etc. with BCLK overclocking and disabled AVX might be faster than an almost-default one with AVX2. But who knows...
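On a locked CPU the multiplier is fixed, so the core clock can only be raised through BCLK (core clock = BCLK × multiplier). The sketch below only does that clock arithmetic. The multiplier and BCLK values are hypothetical. Whether the extra clock outweighs losing AVX2 in x265 is exactly the open question in the hint, and the code does not answer it.

```java
// Core clock = BCLK x multiplier. With a locked multiplier, BCLK is the only knob.
// The multiplier and BCLK values below are hypothetical, not measured results.
class BclkClock {
    static double coreMHz(double bclkMHz, int multiplier) {
        return bclkMHz * multiplier;
    }

    public static void main(String[] args) {
        int lockedMultiplier = 37; // hypothetical locked multiplier
        System.out.println("stock BCLK:  " + coreMHz(100.0, lockedMultiplier) + " MHz");
        System.out.println("raised BCLK: " + coreMHz(130.0, lockedMultiplier) + " MHz");
    }
}
```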